Motivation for Docker:

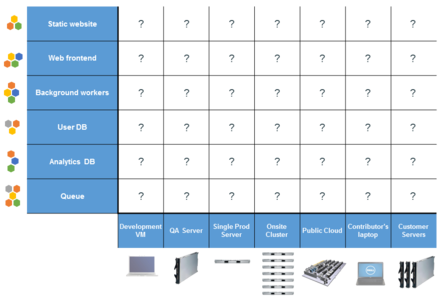

Docker vs Virtual Machine (NERSC Shifter slide 13):

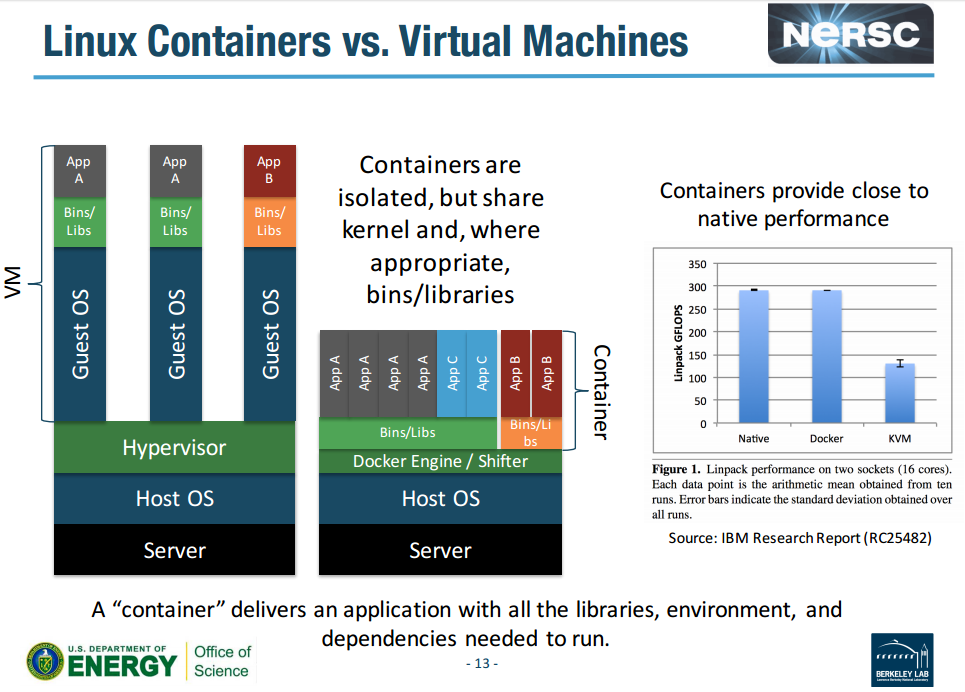

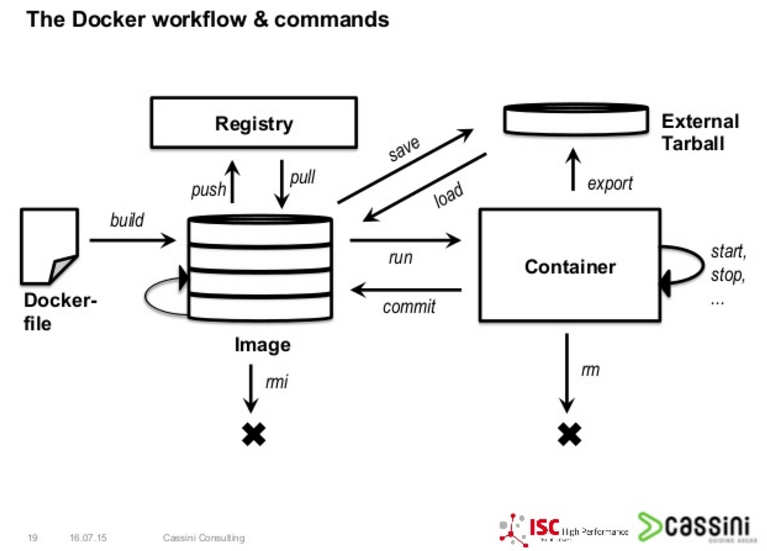

Docker architecture:

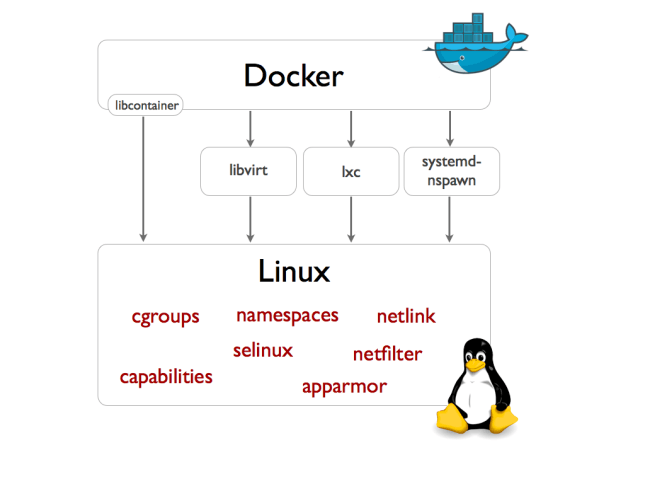

Under the hood:

- Container isolation is provided by Linux kernel namespaces.

- Resource restriction (cpu, memory) is governed by cgroups.

- UnionFS provides a unified file system inside the container, even when there are multiple pieces mounted in overlapping fashion. Several implementations exist, eg AUFS, btrfs, DeviceMapper, etc.

- A container format is a wrapper around the components above. Modern docker uses libcontainer. Older systems used LXC, libvirt, etc.
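You can see the namespace part of this from the host. On Linux, every process has one symlink per namespace under /proc/<pid>/ns, with a target such as pid:[4026531836]. Two processes share a namespace exactly when those targets match. The Python sketch below assumes that Linux /proc layout. namespace_ids and shared_namespaces are hypothetical helper names, and the directory is a parameter so you can point the helpers at any such tree.

```python
import os


def namespace_ids(ns_dir: str) -> dict:
    """Map namespace type -> identifier, e.g. {"pid": "pid:[4026531836]"}.

    ns_dir is normally /proc/<pid>/ns; each entry there is a symlink whose
    target names the namespace type and its inode number.
    """
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # entry vanished, or no permission (other users' processes need root)
            continue
    return ids


def shared_namespaces(ns_dir_a: str, ns_dir_b: str) -> set:
    """Namespace types in which both processes sit in the same namespace."""
    a, b = namespace_ids(ns_dir_a), namespace_ids(ns_dir_b)
    return {kind for kind in a.keys() & b.keys() if a[kind] == b[kind]}
```

For a running container, try something like shared_namespaces("/proc/self/ns", f"/proc/{pid}/ns"), with pid taken from docker inspect -f '{{.State.Pid}}' NAME. It should show that a host shell and the container do not share pid, net, mnt, uts or ipc. Run it as root to read another process's entries.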

Docker traits

- Container provides instant application portability.

- Container is kernel virtualization (VM is hardware virtualization).

- Docker creates a thin compartmentalization between apps, calling them containers.

- Think of Docker as Solaris Container/Zone, AIX WPAR, FreeBSD jail or even a glorified chroot.

- A container uses the host OS kernel, and bin/lib if possible.

- Container is loosely similar to KVM, where the hypervisor is built into the host kernel. But a KVM guest still boots its own kernel, while a container shares the host kernel, so a container is more lightweight than a KVM guest.

- cgroup is used to enforce resource quota for each container

- a container CAN have different bin and lib than the host; such a container would be much fatter. But it is possible to have a host OS running CoreOS and a container using Ubuntu.

- namespace

- Lots of little apps... So, multiple dockers, each hosting an app, are run to satisfy a specific "suite".

- A Wordpress container relies on a separate MySQL container to host its database.

- Both need to rely on OS... ? An OS docker is needed to ... ?? Does not run docker inside another docker.

Cleaning up

docker image prune          # remove dangling (untagged) images
docker image prune -a       # remove all images not used by any container
docker system prune         # this one cleans up a lot more! it removes:
                            #   - all stopped containers
                            #   - all networks not used by at least one container
                            #   - all dangling images
                            #   - unused ...
                            # if data is saved in the container, this would wipe it!
# clear out containers created before dec 31, 1:55pm
docker container prune --filter "until=2023-12-31T13:55:00"
docker volume list | wc
docker volume prune
systemctl stop docker
rm -rf /var/lib/docker      # starting docker again will recreate everything,
                            # but if data was saved in a local container, it would be gone!
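You can also script the container prune step with the Docker SDK for Python, the docker package used in the SDK section further down. This is a minimal sketch that assumes that SDK is installed. Its containers.prune() takes the same filters as the CLI, so the until filter is passed as a dict. The function name is hypothetical, and the client is passed in so the function can be exercised with a stand-in object.

```python
from datetime import datetime


def prune_stopped_containers_before(client, cutoff: datetime) -> dict:
    """Remove stopped containers created before `cutoff`.

    Same effect as:  docker container prune --filter "until=2023-12-31T13:55:00"
    `client` is a docker.DockerClient, e.g. docker.from_env().
    """
    # same timestamp format as the CLI example above
    until = cutoff.strftime("%Y-%m-%dT%H:%M:%S")
    # the daemon answers with a dict holding ContainersDeleted and SpaceReclaimed
    return client.containers.prune(filters={"until": until})
```

For example: prune_stopped_containers_before(docker.from_env(), datetime(2023, 12, 31, 13, 55)).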

Ecosystem, Competitor

- Kubernetes

- Mesos

- Vagrant

- ClusterHQ

- CoreOS

- OpenVZ

- LXC = namespace + cgroup

- Rocket (rkt), a newer, open source container/pod contender.

- systemd-nspawn, AppArmor rkt, runC,

- AUFS, Copy on Write

Docker Hub

Docker images can be placed in a central repository. The "app store" equivalent for docker is at hub.docker.com. This Pocket Survival Guide web site is served by an apache container with all the necessary web content in https://hub.docker.com/r/tin6150/apache_psg3/

No account is needed to pull docker images from the hub.

Account IS needed to post image to the hub.

Docker and RHEL7

- Docker leverages new kernel features, devicemapper (thin provisioning, direct lvm), sVirt, systemd

- RH doesn't recommend using docker with RHEL6 and doesn't ship an rpm for it.

- RHEL6.5 with kernel 2.6.32+ supported an early docker implementation, but it is not recommended nowadays.

- EPEL provides docker-io.rpm for RHEL 6, but it wants kernel 3.8.0 and above, which again points to using RHEL7. (Note the docker.rpm is unrelated; it is for a GUI applet thingy.)

Docker and Windows wsl

At least for WSL Ubuntu 22.04, docker (without Docker Desktop) works for basic functionality. But I noticed that piping the output causes docker to spin, drive up CPU, and never exit (as of 2024.04.17); hopefully this bug will get worked out soon. eg:

sudo docker run -it --rm --entrypoint=/bin/pip ghcr.io/tin6150/python:main list                  # this works
sudo docker run -it --rm --entrypoint=/bin/pip ghcr.io/tin6150/python:main list | grep geopanda   # this spins

Not exactly dind (docker-in-docker), but a pass-thru of the host's docker socket; this works even in WSL:

docker run -it --rm --entrypoint=/bin/sh -v /var/run/docker.sock:/var/run/docker.sock docker:latest

Docker and MacOS with Banana Silicon

Stack Overflow says to use: softwareupdate --install-rosetta

Docker and CoreOS

- CoreOS is much thinner than traditional Linux distro.

- Built with the intent of hosting many docker containers.

Config

HTTPS_PROXY             # docker is said to look at this env var for the proxy
/etc/sysconfig/docker   # docker daemon config for RHEL/Fedora; may need to configure the proxy here.
/etc/default/docker     # docker daemon config for ubuntu
/var/log/docker
/var/lib/docker         # cache of container images and lots of run-time stuff.

Installation

### installation in ubuntu
sudo apt-get install docker.io      # "docker" without .io is for some gui docklet applet.
sudo service docker start           # start docker daemon
docker search httpd
# to allow non-root users to run docker commands, add the user to the docker group:
#   sudo usermod -a -G docker $USER
/etc/default/docker                 # docker daemon config for ubuntu

### installation on amazon linux
### http://docs.aws.amazon.com/AmazonECS/latest/developerguide/docker-basics.html
sudo yum install -y docker
sudo service docker start           # start docker daemon
sudo usermod -a -G docker ec2-user  # to allow non-root to run docker commands, add the user to the docker group.
                                    # docker commands are often run as the os-level user rather than root.

### installation on rhel7
sudo yum install docker             # (deps: docker-selinux, device-mapper, lvm2). There are other optional tools
sudo service docker start
sudo usermod -a -G dockerroot bofh  # other setup may be needed... docker commands still don't run as bofh ... maybe selinux stuff?
sudo docker run httpd               # will download the httpd container if not already present
/etc/sysconfig/docker               # docker daemon config for RHEL/Fedora; may need to configure the proxy here.

Quick test

docker run --rm hello-world
docker run --rm --gpus all nvidia/cuda:10.2-base nvidia-smi
docker run --rm --gpus all nvidia/cuda:11.0-base nvidia-smi

To relay an image from public docker to an internally hosted registry server on host/port "registry:443":

docker tag hello-world registry:443/hello-world
docker image push registry:443/hello-world
docker run --rm registry:443/hello-world
docker tag nvidia/cuda:10.2-base registry:443/cuda:10.2-base
docker image push registry:443/cuda:10.2-base
docker run --rm --gpus all registry:443/cuda:10.2-base
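Why does docker tag hello-world registry:443/hello-world send the push to the internal server? Docker treats the first /-separated part of a name as a registry host when it contains a "." or ":" (or is localhost). Otherwise the image belongs to docker.io, and single-word names get a library/ prefix. Below is a simplified Python sketch of those rules. It does not handle digests such as @sha256:..., and ImageRef and parse_image_ref are hypothetical names.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into (registry, repository, tag).

    Simplified version of Docker's name normalization; digests are ignored.
    """
    name, tag = ref, "latest"
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:            # a ':' after the last '/' starts the tag
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest   # e.g. registry:443, ghcr.io
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:            # official images live under library/
            repository = "library/" + repository
    return ImageRef(registry, repository, tag)
```

So nvidia/cuda:10.2-base resolves to registry docker.io, repository nvidia/cuda, tag 10.2-base. The re-tagged registry:443/cuda:10.2-base points at registry:443.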

Basic Commands

docker search http # look for container in hub.docker.com

docker pull httpd:latest # download the httpd image from hub.docker.com. get the "latest" version.

# stored under /var/lib/docker (see Config)

docker images

docker run -p 80:80 httpd # run the httpd docker image, mapping port 80 on the container to port 80 on the host

# if image not already pull-ed, docker run will pull it automatically

docker run -p 8000:80 httpd & # run the httpd docker image, mapping port 80 on the container to port 8000 on the host,

# putting docker in background so get back the prompt.

# two instances can run as above, resulting in services running in parallel

# netstat -an | grep LISTEN will show that both port 80 and 8000 are in LISTEN state

# note that the httpd process is visible on the host.

# it is NOT like a VM, which runs all its processes in a black box.

docker run -P -d training/webapp python app.py # this run a demo web app called app.py

# -P will map all ports in container to host.

# but it maps sequentially starting from 32768

# -d is daemon mode, ie, put process in background.

docker ps # list running docker process, container id, name, port mappings, etc

docker ps -l # list the last container that was started

docker ps -a # list all containers, including stopped one

docker logs c7f46acc532b # get console output of the specified container id

podman logs --tail 100 c7f46acc532b # last 100 lines of console output

podman logs -f --since 5m c7f46acc532b # --follow=true, last 5 min of console output

docker stop c7f46acc532b # stop the specified container id (container nickname can be used instead)

docker start c7f46acc532b # restart the specified (stopped) container id (assuming the container hasn't been removed with docker rm)

docker exec -it c7f46acc532b bash # drop into a bash shell inside a running container

docker exec uranus_hertz df -h # run the "df -h" command inside the named container

docker port c7f46acc532b # show port mapping of a container to its host (similar to former docker network ls)
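With -P, docker picks the host ports itself, so a script usually has to ask docker port which ones were assigned. The small Python sketch below turns that output into a dict. It assumes the CONTAINER_PORT -> HOST_IP:HOST_PORT line format, where IPv6 bindings may show up as [::]:PORT. parse_docker_port is a hypothetical helper.

```python
def parse_docker_port(output: str) -> dict:
    """Parse `docker port CONTAINER` output into
    {container_port: [(host_ip, host_port), ...]}.

    Expected line format:  80/tcp -> 0.0.0.0:32768
    """
    mappings = {}
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue
        container_port, _, host = line.partition(" -> ")
        host_ip, _, host_port = host.rpartition(":")   # split at the LAST colon (IPv6-safe)
        mappings.setdefault(container_port, []).append((host_ip.strip("[]"), int(host_port)))
    return mappings
```

Feed it subprocess.run(["docker", "port", "c7f46acc532b"], capture_output=True, text=True).stdout.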

docker top uranus_hertz # see the process running inside the specified container

docker pull rhel7:latest # get a basic, off-the-shelf container for redhat 7 (eg from rh cert guide)

docker info # display info on existing container, images, space util, etc.

docker inspect httpd # get lots of details about the container. output in JSON

# eg data volumes, (namespace?) mappings,

docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' uranus_hertz # only retrieve specific "inspection" item
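You can do the same lookup without a Go template. docker inspect prints a JSON array with one object per name given, and the addresses sit under NetworkSettings.Networks. The Python sketch below assumes that JSON layout. container_ips is a hypothetical name, and unlike the template, which concatenates the addresses, it keeps them per network.

```python
import json


def container_ips(inspect_json: str) -> dict:
    """Python counterpart of
        docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' NAME
    Returns {container_name: {network_name: ip_address}}.
    """
    result = {}
    for obj in json.loads(inspect_json):
        networks = obj.get("NetworkSettings", {}).get("Networks") or {}
        name = obj.get("Name", "").lstrip("/")   # docker reports names as "/uranus_hertz"
        result[name] = {net: cfg.get("IPAddress", "") for net, cfg in networks.items()}
    return result
```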

docker images -a # list images (from docker pull). Note that a container is an execution instance, reading info from an image.

docker ps -a # list containers (process, -a include stopped containers) (note diff vs image, which is more like a source file)

docker rm ContainerName # remove container (process listed by docker ps -a)

docker rmi ImageName # remove image (files listed by docker images -a)

# images are the files from docker pull. containers are instances of an image.

docker commit clever_shockley rhel7box2 # save changes of a running container to a new named image called "rhel7box2"

docker commit -m "msg desc" # -m add a message about the commit (description for the new image)

docker commit -a "author name" # -a adds the author's name

docker tag ... # give an image a repository:tag name (eg user/image:tag), needed before docker push to hub.docker.com

docker login # login to docker hub. credentials will be saved base64-encoded in ~/.docker/config.json (ie, NOT encrypted!)
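This is why "NOT encrypted" matters: each auths entry in that file is just base64("username:password"). The Python sketch below decodes such a file. It assumes the plain auths layout. Entries handled by a credential helper (credsStore or credHelpers) carry no auth field and are skipped. saved_credentials is a hypothetical name.

```python
import base64
import json


def saved_credentials(config_text: str) -> dict:
    """Decode the "auth" entries of a ~/.docker/config.json.

    Returns {registry: (username, password)}; base64 is an encoding,
    so no key is needed to recover the password.
    """
    config = json.loads(config_text)
    creds = {}
    for registry, entry in config.get("auths", {}).items():
        token = entry.get("auth")
        if not token:          # credential-helper entries have no "auth" field
            continue
        user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
        creds[registry] = (user, password)
    return creds
```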

docker info # version, disk usage, metadata size, etc

man docker # docker daemon and general instructions

man docker run # specific man page for the run command of docker

# (man-db looks up "man docker run" as the docker-run page)

docker --help

docker run --help # help specific to the sub command.

Under the hood

Process Isolation

- Each container has its own process tree, with its "seed" process (eg httpd) as PID 1.

- Achieved using Namespace.

- The parent is aware of all child processes, but a child doesn't know anything about its parent (security).

- The parent sees the child processes, and each child process really has 2 PIDs: one in the host's PID namespace and one inside the container's.
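You can read the two PIDs from the host. On Linux 4.1 and later, /proc/<pid>/status has an NSpid line that lists the process's PID in each nested PID namespace, outermost (host) first. The Python sketch below parses it. nspids is a hypothetical name.

```python
def nspids(status_text: str) -> list:
    """Return the NSpid entries from the text of /proc/<pid>/status.

    First number: PID in the host's (outermost) PID namespace.
    Last number: PID as seen in the innermost namespace, e.g. the container.
    Returns [] when there is no NSpid line (kernels older than 4.1).
    """
    for line in status_text.splitlines():
        if line.startswith("NSpid:"):
            return [int(field) for field in line.split()[1:]]
    return []
```

For example, nspids(open(f"/proc/{pid}/status").read()) on the host, for the httpd PID seen there, would typically return [host_pid, 1], since httpd is PID 1 inside its container.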

Network Isolation

- Each container has its own network stack, NIC, ARP space, IP space, routing tables,

- Achieved using ip netns

- iptables, brctl, virtual switches

- Docker provides network isolation, but no throttling

- 3 network_mode:

- bridged (default if not specified)

- Each container gets a virtual ethernet (veth) pair attached to a Linux bridge called docker0

- All containers on docker0 can communicate with one another by default (icc=true), ie no isolation between these containers.

- use -p 8000:80 to map host's port 8000 to container's port 80.

- performance is not as good as host mode networking. Some blogs say bridge mode gets 80% of host mode network performance.

- some argues that bridge is more portable and easier to scale in different environment

- host (--net=host)

- container use host network stack, thus no isolation

- allows access to host services such as D-Bus, which can cause unexpected behavior.

- may open the container to the wider world; security implications.

- shared

- An existing container's network stack is shared with other containers, ie the network namespace is shared.

- like bridged, FS and Process isolation remains

- In bridge mode, Docker manipulates iptables directly and thereby bypasses UFW. To protect containers from general access, see below.

Example network config and its effect, for a docker-compose.yml that starts grafana, which uses tcp/3000:

network_mode                  ports (mapping)   docker ps PORTS         netstat -an                    Comment
----------------------------- ----------------- ----------------------- ------------------------------ ----------------------------------------------
(default, ie not spelled out) not spelled out   3000/tcp                (nothing)                      3000 not accessible from host (outside docker)
(default, ie not spelled out) 3000              0.0.0.0:3000->3000/tcp  tcp6 0 0 :::3000 :::* LISTEN
bridge                        3000              0.0.0.0:3000->3000/tcp  tcp6 0 0 :::3000 :::* LISTEN   ie, same as default, expected
host                          not spelled out   (blank)                 tcp6 0 0 :::3000 :::* LISTEN   3000 accessible from host (governed by ufw?)
host                          3333:3000         (blank)                 tcp6 0 0 :::3000 :::* LISTEN   port mapping actually ignored in host mode, host connection to 3333 is rejected

Docker containers behind iptables

Docker by default creates iptables rules at the PREROUTING level, thereby often bypassing the host's iptables/FirewallD rules. To firewall docker containers that use iptables (but not nftables?), one can add "raw" rules to the DOCKER-USER chain.

see docker w/ firewalld

The example below blocks ports 80 and 443 from general internet access, and only allows traffic coming from Cloudflare.

# cat /etc/firewalld/direct.xml
-i enp0s3 -p tcp -m multiport --dports 80,443 -s 0.0.0.0/0 -j REJECT
-i enp0s3 -p tcp -m multiport --dports 80,443 -s 103.21.244.0/22,103.22.200.0/22,103.31.4.0/22,104.16.0.0/13,104.24.0.0/14,108.162.192.0/18,131.0.72.0/22,141.101.64.0/18,162.158.0.0/15,172.64.0.0/13,173.245.48.0/20,188.114.96.0/20,190.93.240.0/20,197.234.240.0/22,198.41.128.0/17 -j ACCEPT
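You can check the intent of the two rules (accept ports 80 and 443 only from the listed ranges) offline with Python's ipaddress module. This sketch models that intent, not iptables itself. It assumes the ACCEPT rule is evaluated before the catch-all REJECT. iptables stops at the first matching rule, so the real order in the chain decides the outcome. verdict is a hypothetical helper.

```python
import ipaddress

# allowed source ranges from the ACCEPT rule above (Cloudflare IPv4 list as captured in these notes)
ALLOWED = [ipaddress.ip_network(cidr) for cidr in (
    "103.21.244.0/22", "103.22.200.0/22", "103.31.4.0/22", "104.16.0.0/13",
    "104.24.0.0/14", "108.162.192.0/18", "131.0.72.0/22", "141.101.64.0/18",
    "162.158.0.0/15", "172.64.0.0/13", "173.245.48.0/20", "188.114.96.0/20",
    "190.93.240.0/20", "197.234.240.0/22", "198.41.128.0/17",
)]


def verdict(src_ip: str, dport: int) -> str:
    """What the two rules intend for a tcp packet arriving on enp0s3."""
    if dport not in (80, 443):
        return "not matched"          # neither rule applies; later rules decide
    addr = ipaddress.ip_address(src_ip)
    return "ACCEPT" if any(addr in net for net in ALLOWED) else "REJECT"
```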

Docker containers behind UFW

Docker by default creates iptables rules at the PREROUTING level, thereby often bypassing the host's FirewallD or UFW rules. See firewall#docker. Disabling docker's manipulation of iptables has lots of side effects. It would be easier to have the container bind only to an internal interface not exposed to the internet. Ref:

Disable docker from mucking with iptables:

echo '{
  "iptables": false
}' > /etc/docker/daemon.json       # note: this overwrites any existing daemon.json

(did not get correct config in /etc/default/docker)
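The echo above replaces the whole /etc/docker/daemon.json and silently drops any other settings in it. Below is a Python sketch that merges the key in instead. It assumes the file holds a single JSON object. set_daemon_option is a hypothetical helper, and docker still needs a restart afterwards.

```python
import json
import os
import tempfile


def set_daemon_option(path: str, key: str, value) -> dict:
    """Set one key in a docker daemon.json, keeping every other key.

    The new file is written next to the old one and then moved into place,
    so an interrupted write never leaves a half-written daemon.json behind.
    """
    config = {}
    if os.path.exists(path):
        with open(path) as f:
            text = f.read().strip()
        if text:                      # an empty file counts as {}
            config = json.loads(text)
    config[key] = value
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(path) or ".", prefix=".daemon.json.")
    with os.fdopen(fd, "w") as f:
        json.dump(config, f, indent=2)
        f.write("\n")
    os.chmod(tmp, 0o644)              # mkstemp creates 0600; daemon.json is usually world-readable
    os.replace(tmp, path)             # atomic rename on the same filesystem
    return config
```

For example, run set_daemon_option("/etc/docker/daemon.json", "iptables", False) as root, then systemctl restart docker.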

allow forwarding to the container:

cp -p /etc/default/ufw /etc/default/ufw.bak

sed -i -e 's/DEFAULT_FORWARD_POLICY="DROP"/DEFAULT_FORWARD_POLICY="ACCEPT"/g' /etc/default/ufw

systemctl restart docker

systemctl restart ufw

Allow the container to go out to the internet. Get the exact subnet from ifconfig docker0.

(Haven't done this yet, but from inside the container I was able to go out. docker-compose maps ports to a specific IP, but that should not matter for outbound traffic.

The other thing is that the host, bofh, had a rule that allows full access, meant for cueball-bofh traffic.)

iptables -t nat -A POSTROUTING ! -o docker0 -s 172.17.0.0/16 -j MASQUERADE

x-ref: https://github.com/tin6150/inet-dev-class/blob/master/tig/README.mode2.rst

File System Isolation

- File system inside container is isolated from the host

- No disk I/O throttling, no disk quota.

- Union FS: DeviceMapper in Fedora. AUFS in Ubuntu.

Docker vs LXC

- LXC is lightweight, but heavily embedded into Gentoo Linux, not portable.

- Docker started in Ubuntu. Application portability was a key goal. Gentoo was providing a container for security/isolation.

- Both rely on Cgroup, union file system.

docker run

Stock httpd container

# run a stock container (apache httpd) on amazon linux
# no changes to the content of the container
docker run -p 80:80 -i -t --rm --name ApacheA -v ~/htdocs4docker:/usr/local/apache2/htdocs/ httpd
docker run -p 8000:80 --name ApacheB -v "$PWD":/usr/local/apache2/htdocs/ httpd &
# run a process (httpd) in a new container
# each process has its own FS, network, and isolated process tree.
# most of the default params are defined in the IMAGE, but cli args override the IMAGE conf.
#
# -p = map host port 8000 to port 80 on the container
#      if not specified, the default maps NOTHING,
#      so nothing outside, not even the host, can get into the docker app!
# -i = interactive, def=false. ^P ^Q detaches and leaves the container running. cannot be used with &
# -t = allocate a pseudo-tty, def=false
# --name foo = assign a name to the container. if not specified, a random 2-word name will be assigned
# --rm = remove the container when it exits, def=false.
#        if the container isn't deleted, it lingers around;
#        see them with docker ps -a, remove with docker rm ContainerName.
#        Note that each time a container is started, its name must not match an existing or stopped container.
#        --rm is good especially for testing, to avoid lots of clean-up work.
# -v "$PWD":/usr/local/apache2/htdocs/ bind-mounts the host's current dir into the container's apache htdocs dir.
#    -v or --volume=... format is host:container
docker run -it httpd /bin/bash
# this runs (a new) apache httpd container, and starts a bash shell running *inside* the container env.
# /usr/local/apache2/htdocs inside this container is where the web pages are served from (if not mapped with -v)
# changes to it persist inside this instance, till the container is removed with docker rm ContainerName
docker exec -it ApacheB /bin/bash
# this runs the bash command in an already running container called ApacheB
# -it makes it interactive with a tty, leaving one with a shell inside the container
# ^D or exit terminates this exec; the container continues to run. it is like logout.
docker exec ApacheB ps -ef
# runs "ps -ef" inside the container, prints the output, and terminates the exec
# think of "ssh remotehost ps -ef" to run a command w/o an interactive shell in a remote environment
# docker exec depends on a running container
# to start a container with a specific command, specify a different entrypoint, eg
docker run --entrypoint "/bin/ls" ubuntu
# but this one fails miserably (on Zorin 12/Xenial or CentOS 7):
docker run -v $HOME:/myhome --entrypoint "/bin/ls /myhome" ubuntu
# the culprit is not -v (nor the kernel): --entrypoint takes a single executable, so docker looks for a
# program literally named "/bin/ls /myhome". Put the arguments after the image name instead:
docker run -v $HOME:/myhome --entrypoint /bin/ls ubuntu /myhome
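You can drive the same run from the Docker SDK for Python, where -p and -v become dicts instead of strings. Below is a sketch of that translation. It assumes the SDK's containers.run() ports and volumes formats. run_kwargs is a hypothetical helper. It only understands the plain HOST:CONTAINER forms used above (no IP prefix, no :ro suffix) and assumes tcp.

```python
import os


def run_kwargs(name: str, port_map: str, bind: str, detach: bool = True) -> dict:
    """Translate  -p HOST:CONTAINER  and  -v HOST_DIR:CONTAINER_DIR  into
    keyword arguments for docker.DockerClient().containers.run().

    e.g. docker run -p 8000:80 --name ApacheB -v "$PWD":/usr/local/apache2/htdocs/ httpd
    """
    host_port, _, container_port = port_map.partition(":")
    host_dir, _, container_dir = bind.partition(":")
    return {
        "name": name,
        # SDK form: {"<container port>/<proto>": host port}
        "ports": {f"{container_port}/tcp": int(host_port)},
        # SDK form: {host path: {"bind": container path, "mode": "rw"}}
        "volumes": {os.path.abspath(os.path.expanduser(host_dir)):
                    {"bind": container_dir, "mode": "rw"}},
        "detach": detach,   # like -d, or the trailing & above
    }
```

For example: docker.from_env().containers.run("httpd", **run_kwargs("ApacheB", "8000:80", os.getcwd() + ":/usr/local/apache2/htdocs/")).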

Container of OSes in Amazon Linux

docker pull ubuntu
docker run -it ubuntu /bin/bash
# start a bash shell that runs inside the docker process tree env
# with -it the run command attaches stdin, stdout and stderr to the console,
# so all keystrokes pass thru (which is why hitting ^Z does not put docker in the background).
# this was done on backbox
# apt-get works, no aptitude (even though the host does have this command)
# ps -ef shows only the bash process!
docker pull rhel7
docker run -it rhel7 /bin/bash
# this was done on backbox
# uname shows ubuntu (the container shares the host's kernel, even though the userland is rhel)
# /etc/redhat-release exists in the FS inside the container
# yum works
# df -hl is very different from the ubuntu container
# mount shows the same list of mounts as in the ubuntu container

CoreOS

CoreOS relies on docker to provide packages: no rpm, dpkg, yum or apt-get. All CoreOS installs have two boot partitions, active/standby, and the OS upgrade is done on the standby one.

docker pull httpd
docker run -p 80:80 httpd
docker pull pdevine/elinks2
docker run -it --rm pdevine/elinks2 elinks http://www.yahoo.com   # will start an interactive browser
docker run -it --rm pdevine/elinks2 elinks http://172.31.28.159   # use the host's eth0 IP to access the web server running on it

alternate entry points, run as user, etc

docker run -it --entrypoint /usr/bin/Rscript -v $HOME:/tmp/home --user=$(id -u):$(id -g) tin6150/r4eta
docker run -it --entrypoint /bin/bash --user=$(id -u):$(id -g) tin6150/r4eta

Building an image using dockerfile

One way to build an image is to commit an existing (modified) container. The other method is to build one from scratch using a dockerfile.

ref: https://docs.docker.com/engine/userguide/dockerimages/

mkdir myapp
cd myapp
vi dockerfile
docker build -t tin6150/myapp:v3 .   # . is the build context; docker looks for ./Dockerfile (or ./dockerfile) in it. -t is for tag
# optional upload to docker hub, assuming user tin6150 has been registered
# the "push path" depends on the tag used to do the build, not the relative file path of where the dockerfile is located.
docker push tin6150/myapp:v3   # include the tag; without one, newer docker pushes only :latest
docker rmi myapp...            # remove an image from the local host.

dockerfile ::

# use ubuntu as a base image to build this docker container/app
FROM ubuntu:14.04
# MAINTAINER is deprecated in newer docker; LABEL maintainer="user <user@example.com>" replaces it
MAINTAINER user <user@example.com>
# always run the apt-get update and install commands in the same RUN line using &&,
# or a cached, stale "apt-get update" layer can lead to package db problems in the resulting container.
# on the flip side, it is kinda "bad form" needing to run update; should just base it on a newer image.
RUN apt-get update && apt-get install -y ruby ruby-dev
# RUN gem install sinatra

- Each RUN line adds a layer, better to chain unix commands using &&

- The ADD command copies files from the build host into the image, so the build is then no longer "build anywhere".
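Docker's Dockerfile best-practices guide states that only RUN, COPY and ADD add filesystem layers; other instructions just change image metadata. Below is a rough Python sketch that counts those instructions, so you can compare a Dockerfile that uses separate RUN lines with one that chains commands using &&. It joins backslash continuations and skips comment lines. It does not handle every Dockerfile parser rule (heredocs, the escape directive), and count_layer_instructions is a hypothetical name.

```python
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def count_layer_instructions(dockerfile_text: str) -> int:
    """Count the RUN/COPY/ADD instructions, the ones that add a layer.

    Backslash continuations are joined first, so one RUN that chains
    commands with && counts once.
    """
    logical_lines, current = [], ""
    for raw in dockerfile_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):   # blank and comment lines never count
            continue
        if line.endswith("\\"):                # continued on the next line
            current += line[:-1] + " "
            continue
        logical_lines.append(current + line)
        current = ""
    if current:
        logical_lines.append(current)
    count = 0
    for logical in logical_lines:
        words = logical.split(maxsplit=1)
        if words and words[0].upper() in LAYER_INSTRUCTIONS:
            count += 1
    return count
```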

Example Docker HTTPD image + psg static web content

docker run -p 80:80 -i -t --name ApachePsg -v ~/htdocs4docker:/mnt/htdocs httpd /bin/bash

# /mnt/htdocs will be created inside the container by the startup process

# note inside this container, it has very few commands. no scp, no wget.

# run this inside the container's bash :

# cp -pR /mnt/htdocs/psg/* /usr/local/apache2/htdocs

# httpd # this starts the process and returns to bash

# run the following on the host (ie, from a different window)

docker commit ApachePsg # create a new image from changes done to the existing container

docker kill ApachePsg

docker run -p 80:80 -i -t --name ApachePsg2 -v ~/htdocs4docker:/mnt/htdocs ApachePsg /bin/bash

## above didn't work: ApachePsg is a container name, not an image name (the commit above produced an unnamed image).

docker start ApachePsg # re-start a container using its name

docker attach ApachePsg # attach to the container (it was last started with bash, so get back to a prompt)

docker export # Export the contents of a container's filesystem as a tar archive

docker save # Save an image(s) to a tar archive (streamed to STDOUT by default)

docker commit # Create a new image from a container's changes

docker commit -m "commitMsg" RunningContainerName

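# but why don't I see the new item in docker images?

# nor was I able to do docker run with the new image...

# certainly, after commit, the psg content remained inside the container.

# Without a REPOSITORY[:TAG] argument, commit creates an unnamed image (shown as <none> in docker images).
# To name it, pass the name to commit (docker commit RunningContainerName repo/name:tag) or tag it afterwards (docker tag IMAGE_ID repo/name:tag).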

# -m commitMsg is very important for identifying the image using history

# Even after commit is done, docker ps -a doesn't show anything new,

# because commit creates an image, not a container (a running instance)!

# so the new image can only be found by carefully checking docker images -a

# Management in Docker is said to be non-existent! Kubernetes??

docker images -a # see that 8e300072616a is newest image created

docker history 8e300072616a # 8e300072616a is the image ID printed by the docker commit above.

# this cmd will show the commitMsg

# and some info on how image was created, and the

docker run -p 80:80 -i -t --name ApachePsgII 8e300072616a /usr/local/apache2/bin/httpd

# confirm that the new image can start

docker rename # rename a container

? rename image: docker tag IMAGE_ID newname adds a new name (docker rmi oldname then drops the old one); no need to upload to dockerhub and pull it again.

docker login # login to docker hub. credentials will be saved as base64 enconding in ~/.docker/config.json (ie, NOT encrypted!)

docker commit -m "apache psgIII" edb6d60c97da tin6150/apache_psg3

# edb6d60c97da is a container id (from docker ps -a)

# tin6150/apache_psg3 is username/imagename

docker push tin6150/apache_psg3 # push the image to hub.docker.com

# at this point, image is uploaded to the web and ready for use by others :)

docker tag e4718e38a3b1 tin6150/apache_psg_3a:dev2 # was able to tag and push, no commit...

## push uploads every layer in the chain that builds the current image, unless the registry already has it,

## so the first push sends a lot of stuff... just as a pull downloads all the layers.

docker push tin6150/apache_psg_3a:dev2 # in hub.docker.com, see 81MB image.

# docker tag ref https://docs.docker.com/mac/step_six/

podman build -t tin6150/r4envids -f Dockerfile . | tee Dockerfile.monolithic.LOG

podman login docker.io

podman push tin6150/r4envids # had pre-created the repo in hub.docker.com

podman push tin6150/r4envids:newtag

## on a different machine, eg centos7, can pull the image to test it out

docker run -p 80:80 -i -t --name ApachePsg_C tin6150/apache_psg3 /bin/bash

# docker run will pull the image, and create a container to run this service.

# dropped into bash prompt, can check the htdocs dir.

# httpd will start the web server

docker run -p 80:80 -i -t --name ApachePsg_C tin6150/apache_psg_3a:dev2 httpd # when no "latest" tag exists, must specify which tag to get

Other more advanced topics

X11 apps

echo $DISPLAY  # :0.0
xhost +        # opens the X server to everyone; "xhost +local:" limits it to local connections
docker run -it -e DISPLAY=$DISPLAY -v /tmp/.X11-unix:/tmp/.X11-unix tin6150/metabolic /usr/bin/xpdf

See:

Docker Data Volume

There are ways for a container to mount a host directory onto an internal directory. Multiple containers can mount the same host directory as well, but applications need to be careful with data access and locking, or it can create corruption.

https://docs.docker.com/engine/userguide/dockervolumes/

Performance

Better than VM, as there is no hypervisor overhead. However, the best hypervisor overhead is said to be just 2% over bare metal, so beyond the VM boot-up time, some claim there is little room for containers to improve on. For microservices, app startup time is important, thus containers still offer an advantage over VMs.

docker run --cpu-shares=n  # assign a relative weight for how much cpu this container gets
                           # by default all containers share cpu equally (default weight is 1024).
                           # the weights of all busy containers are added up, and each gets its weight / total of the cpu.
                           # shares only matter when the cpu is contended.
docker run --cpu-quota=n   # hard cap, enforced by the cgroup cpu controller (CFS bandwidth control), together with --cpu-period
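Here is a worked version of the --cpu-shares arithmetic as a Python sketch. Under full contention, each container gets cpus x weight / sum(weights). --cpu-quota is simply the CPU count times --cpu-period, which defaults to 100000 microseconds. cpu_split and cpu_quota_for are hypothetical names. The split is what busy containers converge to, not a hard limit: an idle neighbour's share goes to the busy ones.

```python
def cpu_split(shares: dict, cpus: float = 1.0) -> dict:
    """Expected CPUs per container when ALL listed containers are busy.

    Each container gets  cpus * its_shares / sum(all_shares).
    """
    total = sum(shares.values())
    return {name: cpus * weight / total for name, weight in shares.items()}


def cpu_quota_for(cpus: float, period_us: int = 100_000) -> int:
    """--cpu-quota value that caps a container at `cpus` CPUs
    for a given --cpu-period (in microseconds)."""
    return int(cpus * period_us)
```

For example, three busy containers with shares of 1024, 1024 and 2048 on a 4-CPU host get 1, 1 and 2 CPUs. Capping one of them at 1.5 CPUs means --cpu-quota=150000 with the default period.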

Dependencies

docker run will pull the dependencies needed (think of yum auto-downloading dependencies). Multi-container apps... one way is to view them as different pieces running on multiple hosts that rely on network communication.

Linking Containers

Linking allows containers to communicate with one another. vs dependencies ?? https://goldmann.pl/blog/2014/01/21/connecting-docker-containers-on-multiple-hosts/ (maybe add diagram)

Multi-container app

Example of running wordpress in one container, and a MySQL DB in a different one. Ref: article by Aleksander Koko

docker run --name wordpressdb -e MYSQL_ROOT_PASSWORD=password -e MYSQL_DATABASE=wordpress -d mysql:5.7   # starts MySQL container
docker run -e WORDPRESS_DB_PASSWORD=password --name wordpress --link wordpressdb:mysql -p 0.0.0.0:80:80 -d wordpress   # starts wordpress container
# --link name:alias creates a private network connection to the named container
#   (--link also copies ENV variables; on conflict they are duplicated to HOST_ENV_abc and HOST_PORT_nnn)
#   (docker now documents --link as a legacy feature; user-defined networks via docker network create replace it)
# if not told to bind to 0.0.0.0:80, wordpress defaults to some docker bridge IP.
# use "-i -t" instead of "-d" to see the web page access log in the console.
#   can then detach from the session using ^P ^Q (it will NOT put the container in a paused state)
#   alt, ^C the process. docker start wordpress will largely resume where it left off
#   (wordpress saves most state info to disk).
# (tested to work on amazon linux. centos7 didn't work, maybe SELinux... maybe cuz ran docker as root.)

The above just serves as a proof of concept. It is not secure. To really run a wordpress site this way, refer to the info in docker hub.

Also, there is a more complex setup with NGINX load balancer, Redis cache: docker wordpress (mostly done via Docker Compose)

Docker Compose

docker-compose up # reads docker-compose.yml and brings up the container(s) specified in that declarative yml file. (Compose probably competes with kubernetes.)

eg Both examples of the wordpress setup have references to Docker Compose.

Security

- Docker creates iptables rules at the PREROUTING level, thereby often bypassing the host's FirewallD or UFW rules. see firewall#docker

- The Docker daemon typically listens on a unix socket. But it can be configured to listen on a network port, in which case TLS certs (with key/passphrase) are recommended. see Docker security doc

Quay.io Registry

From Red Hat. Charges for hosting private repos.

ghcr - GitHub Container Registry

Free for open source projects. Better longevity than Docker? Not sure if it allows larger image sizes. hub.docker.com won't cloud-build anything larger than 20G, but it seems one can build locally and push a 25.1 GB image to docker.io. Pushing to ghcr actually failed, so not sure if I will continue to use it.

head -1 ~/.ssh/token | docker login ghcr.io -u tin6150 --password-stdin
docker tag 869b44021e4a ghcr.io/tin6150/metabolic:4.0
docker push ghcr.io/tin6150/metabolic:4.0   # but failed, maybe due to size:
#   Error: Error copying image to the remote destination: Error writing blob: Error uploading layer to https://ghcr.io/v2/tin6150/metabolic/blobs/upload/8a4c3f7f-1628-43e6-b9aa-0655fe6c1faa?digest=sha256%3A138bf1d88427d73721eb2b23281827e551e1fe7ec42ac63bd35da9859ff8d3ab: received unexpected HTTP status: 504 Gateway Time-out
docker tag b982a301de32 ghcr.io/tin6150/r4envids
docker push ghcr.io/tin6150/r4envids

github package registry, an older way to support containers; avoid using it:

head -1 ~/.ssh/token | docker login https://docker.pkg.github.com -u tin6150 --password-stdin
docker tag IMAGE_ID docker.pkg.github.com/OWNER/REPOSITORY/IMAGE_NAME:VERSION
docker build -t docker.pkg.github.com/OWNER/REPOSITORY/IMAGE_NAME:VERSION PATH
docker push docker.pkg.github.com/OWNER/REPOSITORY/IMAGE_NAME:VERSION

Podman, Docker-compose

Podman 3.0 and up is said to work with docker-compose, see: RH Enable Sysadmin "Using Podman and Docker Compose" (article from 2021.0107). Caveats: One known caveat is that Podman has not and will not implement the Swarm function. Therefore, if your Docker Compose instance uses Swarm, it will not work with Podman.

# trying on greyhound
# Other than Podman and its dependencies, be sure the podman-docker and docker-compose packages are installed. (In addition to... )
sudo systemctl start podman.socket
# test the socket:
sudo curl -H "Content-Type: application/json" --unix-socket /var/run/docker.sock http://localhost/_ping
# these should work with podman:
sudo docker-compose up
sudo podman ps
sudo podman network ls
sudo podman volume ls
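You can also do the curl _ping check from plain Python, since the socket speaks the Docker-compatible HTTP API over a unix socket. Below is a standard-library sketch. UnixHTTPConnection and ping are hypothetical names. The default path assumes podman-docker's /var/run/docker.sock; rootful podman's own socket is typically /run/podman/podman.sock.

```python
import http.client
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)   # host only fills the Host: header
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def ping(socket_path: str = "/var/run/docker.sock") -> bool:
    """Python version of: curl --unix-socket /var/run/docker.sock http://localhost/_ping"""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/_ping")
        resp = conn.getresponse()
        return resp.status == 200 and resp.read().strip() == b"OK"
    except (OSError, http.client.HTTPException):
        return False          # no socket, permission denied, or not an HTTP server
    finally:
        conn.close()
```

ping() returns True when the service answers OK. Like the curl test, it needs root, or membership in the socket's group.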

SDK

python sdk

The -e argument of the docker run command maps to the environment option of client.containers.run(), which takes a dict or a list of "KEY=value" strings.

PYTHONUNBUFFERED=1 # set to any non-empty value to tell python not to use buffered output

buffered=no # is for Rstudio, but seems to affect cli R in linux as well.

import docker

client = docker.from_env()   # connects via DOCKER_HOST or the default local socket

# atlas_image, atlas_docker_vols and atlas_cmd are set earlier (eg read from the yaml config)
atlas = client.containers.run(
    atlas_image,
    volumes=atlas_docker_vols,
    command=atlas_cmd,
    # auto_remove=True is the SDK's --rm; left off here because the container would be
    # deleted as soon as it exits, and atlas.remove() below would then fail with NotFound
    stdout=True,
    stderr=True,
    environment=["PYTHONUNBUFFERED=1", "buffered=no"],   # a dict works too
    detach=True)

# stream=True follows the log until the container exits;
# stdout=True can be replaced by docker_stdout, a setting read from the yaml
for log in atlas.logs(stream=True, stderr=True, stdout=True, timestamps=True):
    print(log.decode(errors="replace"), end="")

# 4. CLEAN UP
atlas.remove()

Reference

- Docker basic from AWS

- Docker Getting Started

- Docker (Taos), slide 50: demo of cgroup on memory

- redis key-value db

- nginx (engine x) load balancer

- Open Container Initiative (OCI) - Plumbing: RunC, Notary (content signing)

- man docker # docker daemon man page

- man docker run # man page for the run command of docker

- Container SlideShare

(I am still waiting for docker to actually name my container Uranus_Hertz, but maybe it is just a matter of time?)

Container Runtime

- Docker runtime engine (LXC?)

- containerd

- rkt

- singularity

Container Orchestration

- Kubernetes

- Docker Swarm

- Rancher

- Mesos Marathon

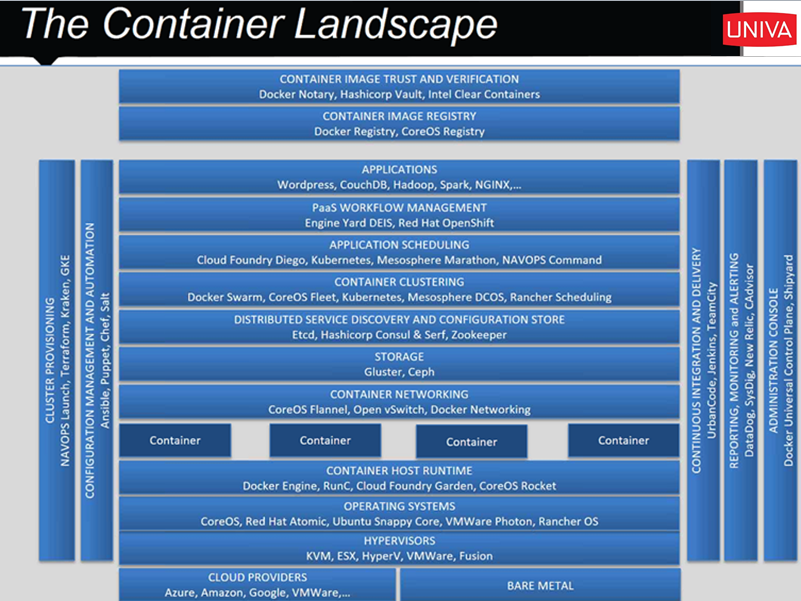

Container Landscape

Players in the container world, circa 2016. (Univa slide)

Container in HPC

Container in HPC is quite a large topic. For a feature comparison table of Shifter vs Singularity, and a list of relevant articles, see my blogger article Docker vs Singularity vs Shifter.

LBNL/NERSC Shifter

- NERSC R&D + collaboration w/ Cray, in production use at NERSC (Edison, Cori), open source release soon. Cray may develop commercial support for this.

- Paper in SC 2015.11

- https://www.nersc.gov/news-publications/nersc-news/nersc-center-news/2015/shifter-makes-container-based-hpc-a-breeze/

- Flexibility: users define their own software stack as a user-defined image (UDI), packaged as a container image.

- Reproducible research: a UDI can be validated, and the workflow will then run exactly the same way every time it is started, even as the environment ages.

- Software stack isolation: users in different realms maintain their own UDIs, and the HPC system simply runs them as jobs, completely independent of one another. This is accomplished by using Linux VFS namespaces to run multiple Shifter containers.

- Can use public image repositories such as Docker Hub, so users can start an application stack without sysadmin privileges.

- Does not use the Docker daemon, as it assumes local disk (aufs, btrfs).

- Native application performance, fast job startup.

- File systems are re-mounted; no setuid.

- Containers are read-only to the user (writes go to a network location?). (Docker allows changes to a container to be kept after an admin issues a save command.)

- Shifter essentially adopts Docker images, but had to redo the backend to provide Docker-daemon-like features executed by the HPC batch manager system.

LBNL/HPCS Singularity v 1.0

- For features of the newer Singularity 2.2, see Docker vs Singularity vs Shifter.

- VMs do not address portability. Singularity does.

- It is like taking the portability of Docker and making it run in an HPC batch system. However, Singularity does not use any piece of Docker.

- SAPP (Singularity App) = combination of an RPM and a container.

- ldd shows which libraries a program needs. Singularity automatically builds the dependency tree and adds all the required dependencies into the container it builds.

- All dependencies, down to the C libraries, are included. Thus, a SAPP is very portable and reproducible.

- A SAPP is a bit like a statically linked binary, but its container also bundles the additional scripts, config files, etc. that make up a workflow.

- A developer can provide a SAPP of a complex workflow, and users won't have to worry about any dependencies!!

- File systems are mounted inside the container without using bind mounts.

- The container runs as a user-space process.

- By and large there are no kernel dependencies, other than InfiniBand (IB) and MPI.

- A SAPP runs as a user process, so it is easy to get it run by an HPC scheduler. There is probably little the scheduler needs to do other than treat the SAPP as an app and run it. This is a HUGE difference compared to Shifter.

- Open MPI support is already included. http://singularity.lbl.gov/docs_hpc.html

- At a high level, Singularity shares a lot of ideas with Shifter. After all, both address the same concern of wanting reproducible workflows. The implementations of the two, however, are drastically different; see the FAQ.

- Developed by Gregory Kurtzer (of Warewulf fame) et al. Version 1 was released April 14, 2016; version 2 is in beta now. Available at gmkurtzer.github.io/. G.K. is from Berkeley Lab High Performance Computing Services (HPCS).
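The ldd step in the Singularity notes above can be sketched in Python. This is not Singularity's actual code; `parse_ldd` and `bundle_list` are hypothetical helpers. The sketch relies on the fact that ldd already reports the full transitive set of shared libraries the loader would pull in, not just the direct ones, so a flat list of paths is enough to know which files must go into the image.

```python
import subprocess


def parse_ldd(output: str) -> list[str]:
    """Return the resolved library paths from ldd's text output.

    Raises FileNotFoundError if ldd reports a library as 'not found',
    since a bundle missing it would not be self-contained.
    """
    paths = []
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue
        if "=>" in line:
            # e.g. "libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x...)"
            name, _, target = line.partition("=>")
            target = target.strip()
            if target.startswith("not found"):
                raise FileNotFoundError(f"unresolved dependency: {name.strip()}")
            if target.startswith("/"):
                paths.append(target.split()[0])
        elif line.startswith("/"):
            # The dynamic loader itself, e.g. "/lib64/ld-linux-x86-64.so.2 (0x...)"
            paths.append(line.split()[0])
        # Anything else (e.g. linux-vdso.so.1) is supplied by the kernel: skip it.
    return paths


def bundle_list(programs: list[str]) -> list[str]:
    """Hypothetical helper: every file to copy into a container image."""
    files = set(programs)
    for prog in programs:
        # check=True: ldd exits non-zero for non-dynamic (e.g. static) binaries.
        out = subprocess.run(["ldd", prog], capture_output=True,
                             text=True, check=True).stdout
        files.update(parse_ldd(out))
    return sorted(files)
```

For example, `bundle_list(["/usr/bin/python3"])` on a Linux host would list the interpreter plus every shared object it loads. A real builder would also need data files, configs, and scripts, which ldd cannot see.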

Container in UGE

- Univa promises to allow qsub to run jobs involving Docker containers.

- qsub will have an -xdv option to map host file systems into the process running inside the container.

- docker will be defined as a boolean resource, requested via the -l option of qsub.

- Additional management and orchestration by new products: Navops Launch, Navops Command.

- Prioritization for different containers
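The qsub options listed above can be put together into a submission command. The notes only name `-l` and `-xdv`. The exact spellings below are assumptions: `-l docker=true` for the boolean resource, and `host_path:container_path` pairs for `-xdv` in the style of `docker run -v`. A real submission would also need to name the image, which these notes do not cover. `docker_qsub_argv` is a hypothetical helper.

```python
import shlex


def docker_qsub_argv(script: str, volumes: dict[str, str]) -> list[str]:
    """Assemble a qsub command line for a Docker job (hypothetical helper).

    Assumed syntax: the boolean resource is requested as "-l docker=true",
    and each -xdv takes "host_path:container_path", like docker run -v.
    """
    argv = ["qsub", "-l", "docker=true"]
    for host_path, container_path in volumes.items():
        # One -xdv per host file system mapped into the container.
        argv += ["-xdv", f"{host_path}:{container_path}"]
    argv.append(script)
    return argv


if __name__ == "__main__":
    cmd = docker_qsub_argv("job.sh", {"/home/alice": "/home/alice"})
    print(shlex.join(cmd))
```

Run as a script, this prints `qsub -l docker=true -xdv /home/alice:/home/alice job.sh`. Building an argv list rather than one string avoids quoting problems when a path contains spaces; it can be passed straight to `subprocess.run`.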


Copyright info about this work

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 2.5 License.

Pocket Sys Admin Survival Guide: for content that I wrote, (CC) some rights reserved.

2005, 2012 Tin Ho [ tin6150 (at) gmail.com ]

Some contents are "cached" here for easy reference. Sources include man pages, vendor documents, online references, discussion groups, etc. Copyright of those is obviously that of the vendors and original authors. I am merely caching them here for quick reference and to avoid broken URL problems.

Where is PSG hosted these days?

tiny.cc/dock

http://tin6150.github.io/psg/psg2.html This new home page at github

http://tiny.cc/tin6150/ New home in 2011.06.

http://tin6150.s3-website-us-west-1.amazonaws.com/psg.html (coming soon)

ftp://sn.is-a-geek.com/psg/psg.html My home "server". Up sporadically.

http://tin6150.github.io/psg/psg.html

http://www.fiu.edu/~tho01/psg/psg.html (no longer updated as of 2007-05)
