Intro to Slurm as presented in LBNL LabTech 2018 by yours truly :).

SLURM

Two-page Slurm cheat sheet

alt cache

Slurm 101

SLURM = Simple Linux Utility for Resource Management. But it isn't so simple anymore, and it has been rebranded as "Slurm", like the drink in Futurama :)

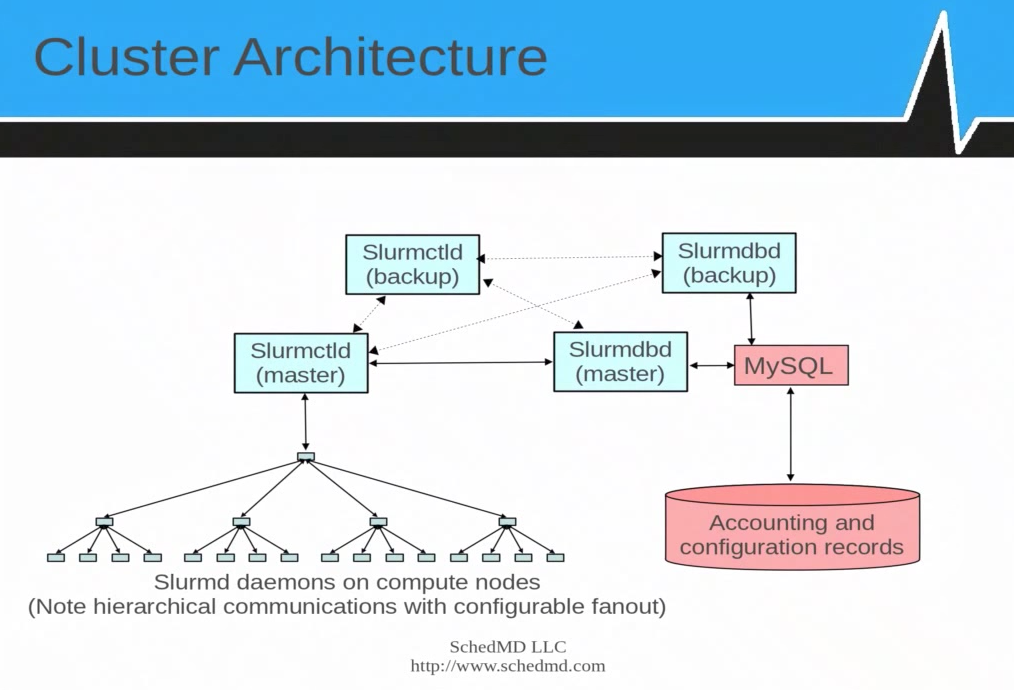

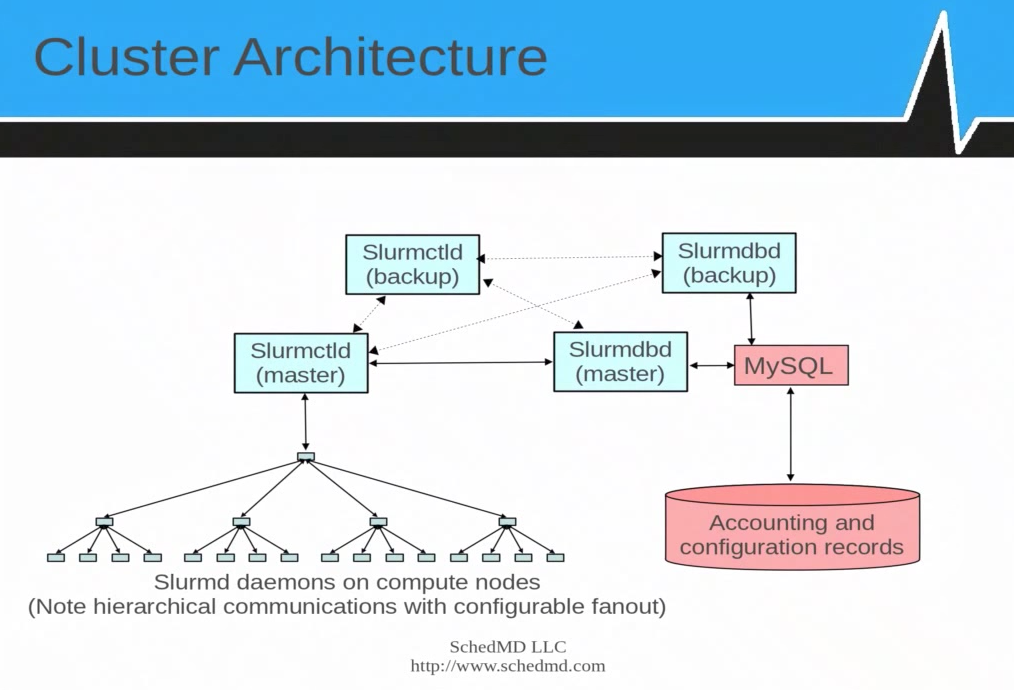

- No single point of failure. But the redundant control daemon brings a lot of headaches.

- About 500k lines of C. API/plug-ins in C, some have a Lua interface.

- Open source + commercial support by SchedMD.

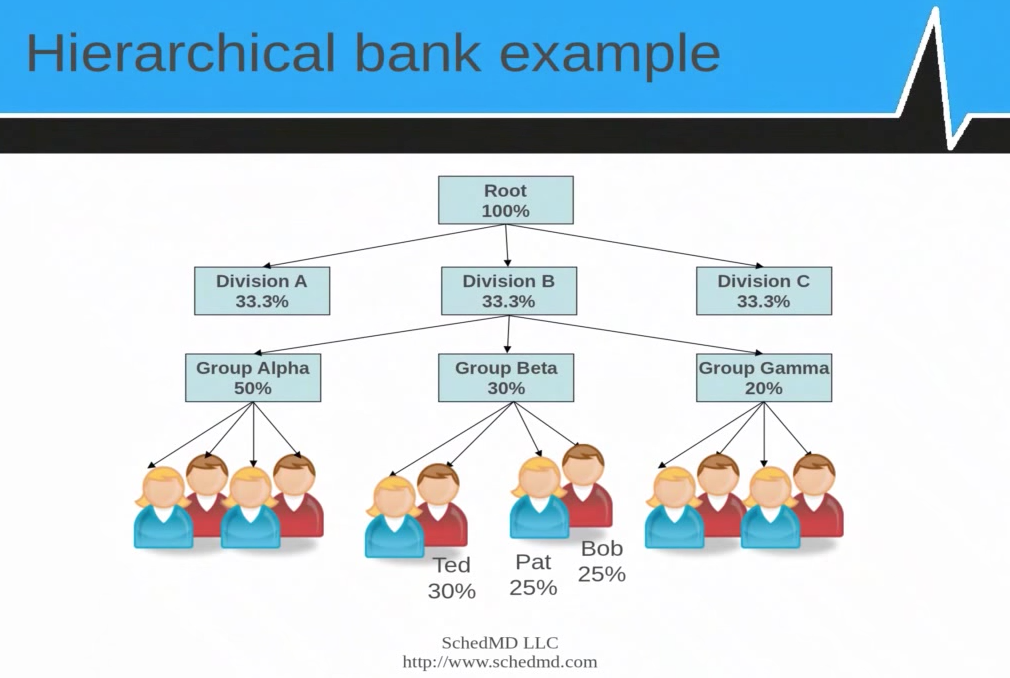

- A couple of items are hierarchical: node daemons, user bank/account grouping (see diagram below).

- Associations are the basic rules that govern who can run jobs where.

- Nodes are grouped into Partitions (essentially queues in other schedulers).

- A user belongs to one (or more) accounts.

- QoS (Quality of Service) governs how much of a given resource an account can use at a time.

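- Job step refers to a set of tasks launched (typically by srun) within a job's allocation. Members of a task array are separate jobs, addressed as JOBID_TASKID.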

Job Submission

sbatch myscript # submit script to run in batch mode

salloc # run interactive job, asking scheduler to allocate certain resources to it

salloc bash # get a bash shell in interactive mode with the requested resources

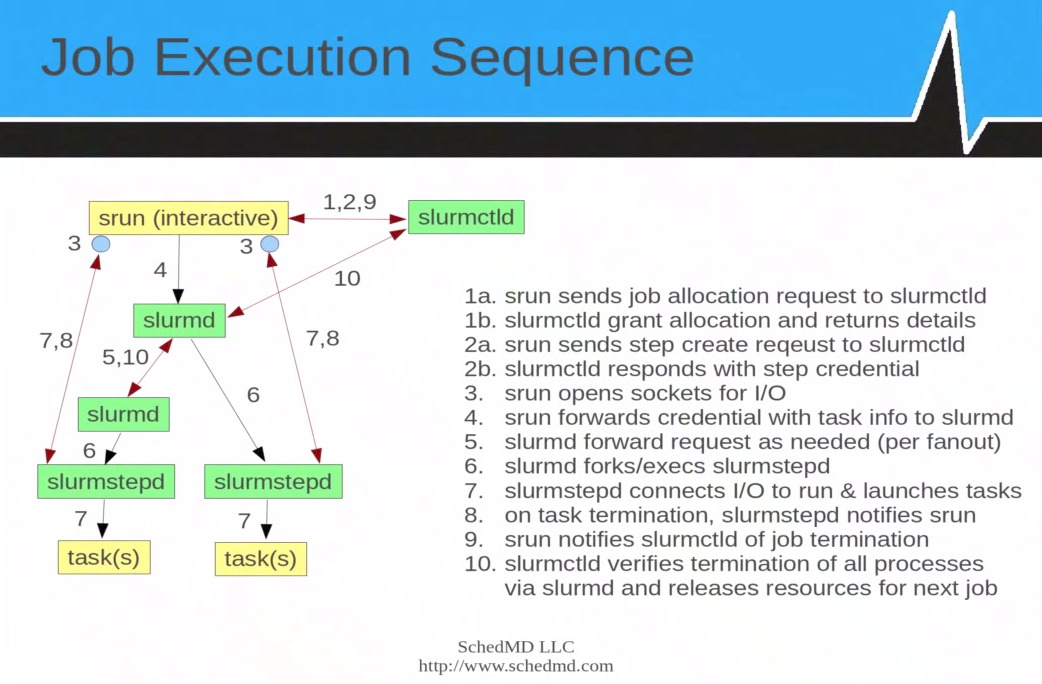

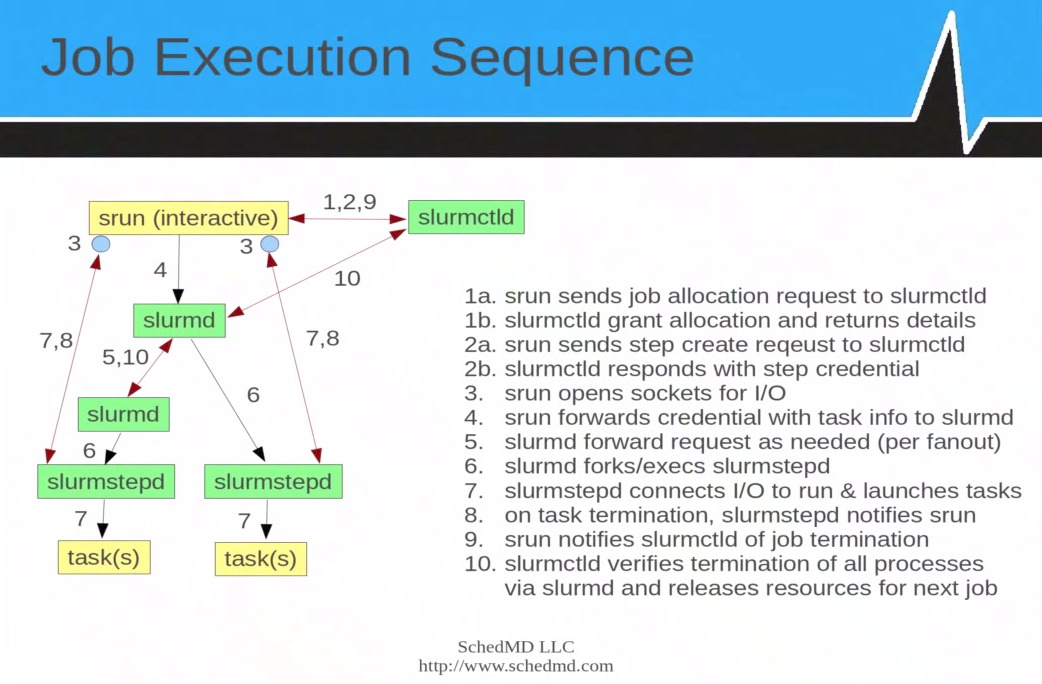

srun # Typically for MPI job, create job allocation, launch job step.

srun --pty -p lr3 -A scs --qos=lr_normal -N 1 -t 0:59:00 /bin/bash # interactive bash shell for 59 min on 1 Node.

srun --pty -p savio2_gpu -A scs --qos savio_normal -N 1 -n 1 -t 60 --gres=gpu:1 bash # requesting gpu node as gres

srun --pty -p savio3_2080ti -A scs --qos savio_normal -n 2 -t 60 hostname

sattach # connect to the stdin, stdout and stderr of a job or job step

Options common to most/all job submission commands:

sbatch/salloc/srun

--ntasks=100 # set number of tasks to 100 (-n 100)

--nodes=x --ntasks-per-node=y # less recommended way to run large MPI job

--time=10 # time limit in minutes (wall clock after job starts) (also help scheduler do backfill)

--dependency=afterok:JOBID # job dependency, like qhold: wait until the parent job has finished successfully before starting this one.

--account=NAME # which account to use for the job

--qos=NAME # what QoS to run (may affect cost, preemption, etc)

--label # prepend output with task number

--nodes=2 # req 2 nodes for this job (-N 2)

--exclusive # have exclusive use of node when running

--multi-prog slurmjob.conf # run a job where diff task run diff prog, as specified in a config file given in the arg

# taskID starts with 0

# %t = task ID

# %o = offset within task ID range

--licenses=foo:2,bar:4 # allocate license with the job.

-J jobname
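The options above can also be embedded in a batch script as #SBATCH directives. Below is a minimal sketch, assuming the partition, account and QoS names from the srun example earlier (lr3, scs, lr_normal); the script name and job name are made up for illustration, and the fallback values only exist so the script can be dry-run outside the scheduler.

```shell
#!/bin/bash
# myscript.sh: hypothetical batch script; submit with: sbatch myscript.sh
# Job name (hypothetical), same as -J
#SBATCH --job-name=demo
# Partition, account and QoS borrowed from the srun example earlier
#SBATCH --partition=lr3
#SBATCH --account=scs
#SBATCH --qos=lr_normal
# One node, four tasks, 30 minutes of wall clock
#SBATCH --nodes=1
#SBATCH --ntasks=4
#SBATCH --time=30

# Slurm sets these variables inside a job; the defaults only let the
# script run outside the scheduler for a dry run.
job_id="${SLURM_JOB_ID:-none}"
task_count="${SLURM_NTASKS:-1}"
echo "job ${job_id}: ${task_count} task(s) on $(uname -n)"

# Launch one job step across the allocation when running under Slurm.
if command -v srun >/dev/null 2>&1 && [ "${job_id}" != "none" ]; then
    srun --label uname -n
fi
```

Options given on the sbatch command line take priority over the #SBATCH lines, so the same script can be reused with, eg, a different --time.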

example job submission with constraints (features in slurm.conf)

srun --pty --partition=savio3_2080ti --account=scs --qos=geforce_2080ti3_normal -n 2 --gres=gpu:GTX2080TI:1 --constraint=ssd --time=00:30:00 bash

srun --pty --partition=savio3_2080ti --account=scs --qos=titan_2080ti3_normal -n 2 --gres=gpu:TITAN:1 --constraint=8rtx --time=00:30:00 bash

Example with slurm array jobs:

UMBC

Though if input files are not named sequentially, there may not be an easy way to match them to array task IDs, and gnu-parallel with a task file as input would be more flexible, albeit a bit more complicated.
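When input files are not named sequentially, one way to match them to array tasks is a plain task list, where line N holds the input for task N. A minimal sketch, assuming a hypothetical task list and made-up input file names; SLURM_ARRAY_TASK_ID is the variable Slurm sets for each array task.

```shell
#!/bin/bash
# One array task per line of the task file, 10 minutes each
#SBATCH --array=1-3
#SBATCH --time=10

# pick_task FILE N: print line N of FILE (the input assigned to array task N).
pick_task() {
    sed -n "${2}p" "$1"
}

# Hypothetical task list; in practice it would be generated ahead of time,
# eg with: ls /path/to/inputs > tasks.txt
task_file="$(mktemp)"
printf '%s\n' sample_A.fastq control-7.fastq run_2018b.fastq > "$task_file"

# Default to task 1 so the script can be tried outside of Slurm.
input="$(pick_task "$task_file" "${SLURM_ARRAY_TASK_ID:-1}")"
echo "array task ${SLURM_ARRAY_TASK_ID:-1} would process: ${input}"
rm -f "$task_file"
```

The --array range has to match the number of lines in the task list, so it is often easier to pass it on the sbatch command line than to hard-code it.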

What/Where

Oftentimes, a number of parameters are required to submit jobs.

eg,

what account I am in? what partitions are there? What QoS are there?

sacctmgr show associations -p user=tin # see what associations a specified user has been granted, -p = "parsable"

sacctmgr show assoc user=tin format=acc,part,qos # list account, partition, QoS for the specified USERNAME

sacctmgr show assoc user=tin format=qos%58,acc%16,user%12,part%18,share%5,priority%16 # share=1 just means fair share, it is a weight factor for job priority

sacctmgr show assoc user=tin -p format=acc,user,part,share,priority,qos | sed "s/|/\t/g"

scontrol show partition # list "queues"

sacctmgr show qos -p format=name # list QoS

sacctmgr show qos format=Name%24,Priority%8,Preempt%18,PreemptMode,GrpTRES%22,MinTRES%26 # savio

sacctmgr show qos format=name%26,Priority%8,Preempt%18,PreemptMode,MaxWall,MaxTRESPU,MaxSubmitPU,GrpTRES%22,MinTRES%22,UsageFactor,GraceTime,Flags%4

sacctmgr show qos format=name%26,Priority%8,Preempt%18,PreemptMode,MaxWall,GrpTRES%22,MaxTRES%22,MinTRES%26,MaxTRESPU,MaxSubmitPU
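The pipe-separated output of -p is easier to post-process than the fixed-width default. As a sketch, the helper below (pick_column, a name made up here) pulls one column out by its header; the sample rows are hypothetical and stand in for real sacctmgr output.

```shell
#!/bin/bash
# pick_column NAME: read pipe-delimited text with a header row on stdin
# (the layout produced by the -p option) and print the values of column NAME.
pick_column() {
    awk -F'|' -v want="$1" '
        NR == 1 { for (i = 1; i <= NF; i++) if ($i == want) col = i; next }
        col     { print $col }
    '
}

# Hypothetical sample; on a cluster this would come from something like:
#   sacctmgr show assoc user="$USER" -p format=acc,user,part,qos
sample='Account|User|Partition|QOS|
scs|tin|lr3|lr_normal|
scs|tin|savio2_gpu|savio_normal|'

# List the distinct partitions found in the sample.
printf '%s\n' "$sample" | pick_column Partition | sort -u
```

If the requested header is not present, the helper prints nothing, which makes a typo in the column name easy to spot.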

Job Control

scancel # send signal to cancel job or job steps (individual task array job)

JOBID # cancel a job (if task array, ??all job steps)

JOBID.TASKID # cancel only specific job step

--user=USERNAME --state=pending # kill all pending jobs for named user

sbcast # "broadcast": xfer file to compute nodes running the job, using hierarchical communication

# may or may not be faster than a shared FS.

squeue --start -j JobID # scheduler returns an estimate of when the job would start running

# may return N/A when --time not specified for other jobs

srun_cr # run with BLCR (Berkeley Lab Checkpoint/Restart)

strigger # run arbitrary script in response to system events, eg node dies, daemon stops, job reaches time limit, etc

# persistent triggers will remain after event occurs

-c ? # create trigger

--destroy

--list # list triggers ?

System Info

sinfo # status of node, queue, etc

--Node # output info in node-centric view (group similar nodes together)

-p PARTITION # info on specific partition (queue)

--long # add info on memory, tmp, reason, etc

sinfo -R -S %E # output sorted/grouped by Reason

sinfo -R -S %H # output sorted by timestamp

sinfo -R -S %n # output sorted by nodelist

alias sinfo-N='sinfo --Node --format "%N %14P %.8t %E"' # better sinfo --Node; incl idle ##slurm

# -N is node centric, ie one node per line, has to be first arg

# -p PARTNAME # can add this after aliased command instead of using grep for specific queue

alias sinfo-f='sinfo --Node --format "%N %.8t %16E %f"' # Node centric info, with slurm feature

# %f = feature eg savio3_m1536, which is 16x more ram than savio3_m96

# feature name change to ponder: 4x1080ti, 2xV100, 4x2080ti, 8xTitanRTX (do we always get Ti version?) but may not want to change savio2, maybe only add to it

alias sinfo-R='sinfo -R -S %E --format="%9u %19H %6t %N %E"' # -Sorted by rEason (oper input reason=...) ##slurm

# %E is comment/reason, unrestricted in length.

# once -R is used, it precedes -N, but this output is good for sorting by symptoms

squeue # status of job and job steps, TIME means job running time so far

-s # info about job steps

-u USERNAME # job info of specified user

-t all # all states (but recent jobs only; historical info is in accounting records only)

-i60 # iterate output every 60 sec

squeue -p PARTITION --sort N -l # show nodes of a partition sequentially, with long format (elapsed time and time limit)

squeue -o "%N %l" # specify output format: %N = node, %l = time limit (%L = time left)

# default output: "%.18i %.9P %.8j %.8u %.2t %.10M %.6D %R"

# def for -l, --long: "%.18i %.9P %.8j %.8u %.8T %.10M %.9l %.6D %R"

# def for -s, --steps: "%.15i %.8j %.9P %.8u %.9M %N"

squeue --sort N -o "%.18i %.9P %.8j %.8u %.8T %.10M %.9l %.6D %R"

squeue --sort N -o "%R %.6D %.14P %.18i %.8j %.14u %.8T %.10M %.9l"

# %R is the node list for running jobs (could be multiple nodes), or the reason in parentheses when the job is not RUNNING yet.

# %j is job name

# %u is username

# %T is state info (RUNNING, PENDING, etc)

# %l = time limit

smap # topology view of system, job and job steps. (new tool that combine sinfo and squeue data)

sview # new GUI: view + update on sys, job, step, partition, reservation, topology

scontrol # admin cli: view + update on sys, job, partition, reservation

# dumps all info out, not particularly user friendly

scontrol show reservations # see job/resource reservations

scontrol show partition # view partition/queue info

scontrol show nodes # view nodes info (as specified in slurm.conf)

scontrol show config # view config of whole system (eg when asking mailing list for help)

scontrol update PartitionName=debug MaxTime=60 # change the MaxTime limit to 60 min for the queue named "debug"

scontrol show jobid -dd 1234567 # see job detail, including end time

scontrol update jobid=1234567 TimeLimit=5-12 # change time limit of a specific job to 5.5 days (job runtime extension, but it is not adding time to existing, nor is it setting a new finish time, but change the original wall clock limit, so be sure that it is longer than previous setting, eg default of 3 days for some queue)
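Time limits such as 5-12 use Slurm's day-hour notation, which is easy to misread. The sketch below converts the documented forms (M, M:S, H:M:S, D-H, D-H:M, D-H:M:S) to whole minutes; the function name is made up for illustration and is not part of Slurm.

```shell
#!/bin/bash
# slurm_time_to_minutes SPEC: convert a Slurm time string to whole minutes
# (seconds are dropped). Accepted forms: M, M:S, H:M:S, D-H, D-H:M, D-H:M:S.
slurm_time_to_minutes() {
    local spec="$1" days=0 hours=0 minutes=0 rest a b c
    if [[ "$spec" == *-* ]]; then
        # Day form: everything before the dash is days, the rest is H[:M[:S]].
        days="${spec%%-*}"
        rest="${spec#*-}"
        IFS=: read -r a b c <<< "$rest"
        hours="${a:-0}"; minutes="${b:-0}"
    else
        IFS=: read -r a b c <<< "$spec"
        if [[ -n "$c" ]]; then        # H:M:S
            hours="$a"; minutes="$b"
        else                          # M or M:S
            minutes="$a"
        fi
    fi
    # 10# forces base 10 so that values such as 08 are not read as octal.
    echo $(( 10#$days * 1440 + 10#$hours * 60 + 10#$minutes ))
}

slurm_time_to_minutes 5-12       # 5 days 12 hours, prints 7920
slurm_time_to_minutes 0:59:00    # prints 59
```

Comparing the result against the job's current limit (from scontrol show jobid) is a quick check that the new TimeLimit really is longer than the old one.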

sacct # view accounting by individual job and job steps (more in troubleshooting below)

sacctmgr show events start=2018-01-01 node=n0270.mako0 # history of sinfo events (added by scontrol) but rEason is truncated :(

sstat -j # on currently running job/job step (more info avail than sacct which is historical)

-u USERNAME

-p PARTITIONNAME

sprio # view job PRIOrity and how factors were weighted

sshare # view fair-SHARE info in hierarchical order

sdiag # stat and scheduling info (exec time, queue length). SLURM 2.4+

sreport # usage by cluster, partition, user, account

# present info in more condensed fashion

Env Var

Slurm reads environment variables to help it determine behavior.

CLI argument(s) will override env var definitions.

See:

schedmd reference

SQUEUE_STATE=all # tell squeue cmd to display job in all state (incl COMPLETED, CANCELLED)

SBATCH_PARTITION=default_q # specify the given partition as the default queue

SLURM Job / Queue State

squeue

Node state code

State with asterisk (*) (eg idle*, draining*) means node is not reachable.

state as reported by sinfo

down* Down, * = unreachable

down? Down, ? = node reserved

drng draining - ie job still running, but no new job will land on node

drain drained - ie all jobs have been cleared

comp completing??

idle online, waiting for action

alloc allocated, ie running job

mix some cpu running job, but has more resource avail to run more jobs (non exclusive mode).

plnd ?? planned? supposed to be transient?

Node state code symbol suffix

$ reserved, maintenance

* down, unreachable

~ powered off

!,% powering down

# being powered up

@,^ rebooting

- planned by the backfill scheduler for a higher priority job

JOB REASON CODES

Cleaning

NodeDown

JOB STATE CODES - reported by squeue

PD PENDING

CG COMPLETING

CD COMPLETED

F FAILED

NF NODE_FAIL

OOM OUT_OF_MEMORY

R RUNNING

ST STOPPED

TO TIMEOUT -- typically time limit exceeded, at the QoS for the partition, user or group level.

ref:

sinfo state code

SLURM Troubleshooting

- /var/log/slurm/slurmctld.log # master scheduler log

- /var/log/slurm/slurmd.log # per node local daemon log

- munge -s "test message" | ssh computenode unmunge # test if munge.key works correctly

sinfo | grep n0033.savio2 # what state a node is in

squeue | grep n0164.savio2 # whether the node is running any job

sacctmgr show associations # list all associations. think of them as acl.

# it is the union of all entities in each association entry that govern if user X has access to resource Y (nodes operating under certain qos)

sacctmgr show associations -p user=sn # association for specified user

# -p use | to separate columns, reduce space usage.

sacctmgr show qos -p # show all avail Quality of Service entities

sacctmgr show qos format=Name%32,Priority%8,Preempt%18,PreemptMode,UsageFactor,GrpTRES%22,MaxTRES%22,MinTRES%22

# Example qos:

Name |Priority|GraceTime|Preempt |PreemptMode|||UsageFactor|GrpTRES||||||MaxTRES||||||||||MinTRES|

genomicdata_savio3_bigmem| 1000000| 00:00:00|savio_lowprio|cluster |||1.000000 |node=4 ||||||node=4 ||||||||||cpu=1 |

genomicdata_savio3_xlmem | 1000000| 00:00:00|savio_lowprio|cluster |||1.000000 |node=1 ||||||node=1 ||||||||||cpu=1|

squeue # full detail of current job queue (all user, all jobs, on all partitions/queues)

squeue -p stanium # queue for the named partition

# State: (R)unning, PenDing.

# Reason: Resource = waiting for resource eg free node

# Priority = other higher priority job are scheduled ahead of this job

sinfo -t I # list staTe Idle nodes

sinfo -t I -p stanium # Idle nodes in stanium partition

squeue --start # estimated start time of each queued job

# N/A if time cannot be estimated (eg non time bound jobs exist)

scontrol show reservation # show reservations

# reservation are typically maintenance window placed by admins

# but sys admin can also reserve to let user then run certain jobs in the reservation window.

# jobs will not start in such reservation window

# running job will NOT be killed by slurm if run into the reservation

# listed user of the reservation can submit job that is flagged to use the reservation

scontrol create reservation starttime=2018-01-02T16:15 duration=60 user=tin flags=maint,IGNORE_JOBS nodes=n0142.cf1,n0143.cf1 reservation=rn_666

scontrol create reservation starttime=2018-01-02T16:15 duration=10-0:0:0 user=tin flags=maint nodes=n0142.cf1,n0143.cf1 reservation=rn_66r76

scontrol create reservation start=2018-01-10T12:25 end=2018-01-13 user=tin,meli flags=maint nodes=n0018.cf1,n0019.cf1 reservation=resv777

# create reservation, admin priv may be req

# duration is number of minutes

# duration number of 10-0:0 is 10 days, 0 hours, 0 min. can be abbreviated to 10-0 or even 10-

# reservation=rn_666 is optional param to give reservation a name,

# if not specified, slurm will generate a name for it

# nodes=lx[00-19] to specify a range should be doable, but so far have not been able to n00[00-19].cf1. slurm too old?

# can have overlapping reservations (eg same set of nodes reserved under different names across time windows that overlap)

# presumably then scheduler accept job as per reservation, then schedule as a resource sharing exercise.

scontrol update reservation=rn_666 EndTime=2020-02-03T11:30

# change end time of reservation

scontrol delete reservation=rn_666

sbatch --reservation=rn_666 ... # submit a job run under reservation

Job & Scheduling

sacct # view accounting by individual job and job steps

sacct -j 11200077 # info about specific job

sacct -j 11200077 --long -p # long view, pipe separated columns

sacct --helpformat # list fields that can be displayed

# --long and --format are now mutually exclusive

# PD = PENDING, see what resources have been requested

sacct -u USERNAME -s PD -p --format jobid,jobname,acc,qos,state,Timelimit,ReqCPUS,ReqNodes,ReqMem,ReqGRES,ReqTres

# timelimit will give idea how long job will run

sacct -s running -r QNAME --format jobid,timelimit,start,elapsed,state,user,acc,jobname

# when will a job start

sacct -u USERNAME --partition QNAME --format jobid,jobname,acc,qos,state,DerivedExitCode,Eligible,Start,Elapsed,Timelimit

# NNodes = Allocated or Requested Nodes, depending on job state

sacct -u USERNAME -r QNAME -p --format jobid,jobname,acc,qos,state,AllocNodes,AllocTRES,Elapsed,Timelimit,NNodes

# no data on these max ...

sacct -X -r QNAME -o jobid,User,Acc,qos,state,MaxDiskWriteNode,MaxDiskReadNode,MaxPagesNode,MaxDiskWriteTask,MaxVMSize,MaxRSS

# MaxRSS will be collected when slurm.conf has clause 'JobAcctGatherType=jobacct_gather/cgroup'

# ref: https://slurm.schedmd.com/cgroups.html

# seff script likely depend on this as well

export SACCT_FORMAT="JobID%20,JobName,User,Partition,NodeList,Elapsed,State,ExitCode,MaxRSS,AllocTRES%32,MaxVMSize"

sacct -j $JOBID # would report max memory usage under MaxRSS, when configured

# what node a specific job ran on:

sacct -o job,user,jobname,NodeList,AllocNode,AllocTRES,DerivedExitCode,state,CPUTime,Elapsed -j 10660573,10666276,10666277,10666278

# example of querying jobs that ran in a certain time frame, in a specific partition (-r or --partition= )

sacct -p -X -a -r savio3_gpu -o job,start,end,partition,user,account,jobname,AllocNode,AllocTRES,DerivedExitCode,state --start=2021-12-28T23:59:59 --end=2023-01-01T23:59:59

# find jobs on a specific node.

# -N == --nodelist man page says can take node range, but n0[258-261] didn't work

sacct -p -a --nodelist=n0176.savio3 -o job,start,end,partition,user,account,jobname,AllocNode,AllocTRES,DerivedExitCode,state --start=2022-10-11T23:59:59 --end=2023-01-31T23:59:59

# find jobs on a specific node with some sort of fail state

sacct -p -a -s F,NF,CA,S,PR,TO,BF --nodelist=n0176.savio3 -o job,start,end,partition,user,account,jobname,AllocNode,AllocTRES,DerivedExitCode,state --start=2022-10-11T23:59:59 --end=2023-01-31T23:59:59

# all users, failure states, in a certain time frame (hung job not listed here; end time required or get no output):

sacct -a -X -s F,NF -r lr5 -S 2019-12-16 -E 2019-12-18

# Node Failure, job would have died (-a = all users, may req root):

sacct -a -X -s NF -r lr5 -o job,start,end,partition,user,jobname,AllocNode,AllocTRES,DerivedExitCode,state,CPUTime,Elapsed -S 2017-12-20 -E 2018-01-01

# many problem states (but may not be comprehensive), for all users

sacct -a -X -s F,NF,CA,S,PR,TO,BF -r lr5 -o job,start,end,partition,user,account,jobname,AllocNode,AllocTRES,NNodes,DerivedExitCode,state -S 2017-12-25 -E 2017-12-31

# get an idea of failed/timeout jobs and nodes they ran on

# SystemComment,Comment are usually blank

# Reason no longer avail?

# but may not be the last job that ran on the node, other jobs could have subsequently ran on the node...

# specifying End time required even when not asked for it to be in output list (else get empty list)

sacct -S 2019-12-21 -E 2020-01-01 -a -X -s F,NF,TO -p -o state,job,start,Elapsed,ExitCode,jobname,user,AllocNode,NodeList,SystemComment,Comment | sed 's/|/\t/g' | less

# %n expand width of specific field. submit time is when slurm create all the job log entries

sacct -j 7441362 -S 2021-02-01 -E 2021-02-17 --format submit,start,end,Elapsed,jobid%18,state%16,ExitCode,user,account,partition,qos%15,timelimit,node%35

-a = all

-j or -u would limit to job id or user

-X = don't include job steps (default would include them)

Slurm exit states are known to be all over the place and may not represent the true reason why a job ended.
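To get a quick feel for how jobs ended, the pipe-separated sacct output can be tallied per state. A rough sketch, assuming State is requested as the first output column; the helper name and the sample rows are hypothetical.

```shell
#!/bin/bash
# count_states: read `sacct -p` style output on stdin (header row, pipe
# separated, State in the first column) and print a count per state.
count_states() {
    awk -F'|' 'NR > 1 && $1 != "" { n[$1]++ } END { for (s in n) print n[s], s }' | sort -k2
}

# Hypothetical sample rows; on a cluster the input would come from eg:
#   sacct -a -X -p -s F,NF,TO -S 2019-12-21 -E 2020-01-01 -o state,job,nodelist
sample='State|JobID|NodeList|
FAILED|1001|n0001.lr5|
TIMEOUT|1002|n0002.lr5|
FAILED|1003|n0001.lr5|'

printf '%s\n' "$sample" | count_states
```

Swapping the first column for NodeList gives the same tally per node, which helps when hunting for a node that keeps failing jobs.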

# what job ran on a given node

?? sacct -N n0[000-300].lr4

?? get empty list :(

Slurm Admin stuff

Managing node state

sinfo -R # see what nodes are down

scontrol update node=n0001.savio1,n0002.savio2 state=down reason="bad ib" # manually down a node

scontrol update node=n0001.savio1,n0002.savio2 state=resume # revive a node

Managing user association

sacctmgr add = sacctmgr create

sacctmgr list = sacctmgr show

# "create association" is

# really updating user entry, adding partition it can use, qos, etc

sacctmgr add user tin Account=scs Partition=alice0 qos=condo_gpu3_normal,savio_lowprio # if no qos is specified, some default will be created? doesn't tend to give a desirable result

sacctmgr delete user tin Account=scs Partition=savio3,savio3_bigmem

sacctmgr add user tin Account=scs Partition=savio3,savio3_bigmem qos=normal,savio_debug,savio_lowprio,savio_normal

for User in scoggins kmuriki wfeinstein; do

sacctmgr add user "$User" Account=scs Partition=savio3,savio3_bigmem qos=normal,savio_debug,savio_lowprio,savio_normal

done

# if changes are needed, modify user doesn't seem to be very fine tuned.

# maybe better off removing the very specific association and recreating it

# but be careful to specify all params of the delete lest it delete more assoc entries than desired.

sudo sacctmgr delete user where user=nat_ford account=co_dw partition=savio3_gpu

sudo sacctmgr add user nat_ford account=co_dw partition=savio3_gpu QOS=dw_gpu3_normal,savio_lowprio DefaultQos=dw_gpu3_normal

Slurm QoS config

use sacctmgr command, not editing slurm.conf

Tracking RAM usage in addition to CPU (htc partition with per-core scheduling).

sacctmgr create qos name=tintest_htc4_normal priority=10 preempt=savio_lowprio preemptmode=cluster UsageFactor=1.000000 GrpTRES='cpu=224,Mem=2060480' MinTRES='cpu=1'

# change existing QoS:

sacctmgr modify qos where name=tintest_htc4_normal set priority=1000000 preempt=savio_lowprio preemptmode=cluster UsageFactor=1.000000 GrpTRES='cpu=224,Mem=2060480' MinTRES='cpu=1'

sacctmgr remove qos where name=tintest_htc4_normal

The Mem= value should be a multiple of nodes * RealMemory (the RealMemory clause in slurm.conf). Value is in MB.
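As a worked example of the Mem= arithmetic: the node count and RealMemory below are assumptions, chosen only because their product matches the 2060480 used in the commands above. The real figures come from slurm.conf (or slurmd -C on a node).

```shell
#!/bin/bash
# grp_mem_mb NODES REALMEMORY_MB: memory figure (in MB) for GrpTRES Mem=,
# ie node count times the RealMemory value from slurm.conf.
grp_mem_mb() {
    echo $(( $1 * $2 ))
}

# Hypothetical inputs: 4 nodes, each with RealMemory=515120.
nodes=4
real_memory_mb=515120
echo "GrpTRES='cpu=224,Mem=$(grp_mem_mb "$nodes" "$real_memory_mb")'"
```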

to remove any specific TRES from having a Min req, set its value to -1. eg:

sacctmgr modify qos where name=gpu3_normal set MinTRES='cpu=-1'

Tracking GPU (GRES) is a bit trickier, especially when there are multiple types:

slurm.conf:

change

AccountingStorageTRES=Gres/gpu

to

AccountingStorageTRES=Gres/gpu,license/2080,license/3080,license/rtx,license/v100

then add to qos so select user can use such gpu "license"

sacctmgr add qos savio_lowprio

sacctmgr add qos geforce_2080ti2_normal Preempt=savio_lowprio GrpTRES="cpu=32,gres/gpu:GTX2080TI=16" MinTRES="cpu=2,gres/gpu=1" Priority=1000000 # did not enforce job land on gtx2080ti

sacctmgr add qos geforce_2080ti2_normal Preempt=savio_lowprio GrpTRES="cpu=32,gres/gpu:GTX2080TI=16" MinTRES="cpu=2,gres/gpu:gtx2080ti=1" Priority=1000000 # seems to enforce

sacctmgr add qos titan_2080ti3_normal Preempt=savio_lowprio GrpTRES="cpu=32,gres/gpu:TITAN=16" MinTRES="cpu=2,gres/gpu:titan=1" Priority=1000000 # seems to enforce, but must specify this MinTRES

sacctmgr modify qos titan_2080ti3_normal set GrpTRES="cpu=32,gres/gpu:TITAN=16" MinTRES="cpu=2,gres/gpu:titan=1" Priority=1000000 # seems to enforce, but must specify this MinTRES

sacctmgr add qos titanB_2080ti3_normal Preempt=savio_lowprio GrpTRES="cpu=32,gres/gpu:TITAN=16" MinTRES="cpu=2" Priority=1000000 # no MinTRES for gres

sacctmgr add qos titanC_2080ti3_normal Preempt=savio_lowprio GrpTRES="cpu=32,gres/gpu:TITAN=16" Priority=1000000 # no MinTRES

sacctmgr modify user tin set qos+=titanC_2080ti3_normal

test with (repeat to see if land elsewhere, may have to create congestion):

srun --pty --partition=savio3_2080ti --account=scs --qos=geforce_2080ti3_normal -n 2 --gres=gpu:GTX2080TI:1 --time=00:30:00 bash

srun --pty --partition=savio3_2080ti --account=scs --qos=titan_2080ti3_normal -n 2 --gres=gpu:TITAN:1 --time=00:30:00 bash

but if the subtype is not specified, the job will get stuck as PENDING with reason QOSMinGRES

per https://slurm.schedmd.com/resource_limits.html

bottom section on "Specific Limits Over Gres",

a Slurm design limitation means it can't enforce that subtypes are used. A lua plugin script could help.

But then that creates forced fragmentation and won't let users run on varying gpu types.

An alternative is to use license to give user preferential access...

sacctmgr add qos lic_titan_normal Preempt=savio_lowprio GrpTRES="cpu=192,gres/gpu:TITAN=96,license/titan=96" MinTRES="cpu=2,gres/gpu=1,license/titan=1" Priority=1000000

sacctmgr add qos lic_titan_normal Preempt=savio_lowprio GrpTRES="cpu=192,gres/gpu:TITAN=96,license/titan=96" MinTRES="cpu=2,license/titan=1" Priority=1000000

sacctmgr add qos lic_titan_normal Preempt=savio_lowprio GrpTRES="cpu=192,license/titan=96" MinTRES="cpu=2,gres/gpu=1" Priority=1000000

sacctmgr add qos lic_naomi_normal Preempt=savio_lowprio GrpTRES="cpu=8,gres/gpu:3080=8,license/3080=8" MinTRES="cpu=1,license/3080=1" Priority=1000000

hmm... the partition need to be updated to say they require the license TRES...

Other places to restrict user access...

slurm.conf PartitionName def, has a AllowGroups, AllowAccounts, AllowQos that govern who can use this partition.

Partition Qos.

(similar thing may exist for Node definition, or is that only for "FrontEnd" nodes? doc is not clear)

sacctmgr show tres

MPI job in slurm

See

schedmd mpi_guide.html

Some use mpirun, others use Slurm env vars to see what resources are allocated to the job.

Slurm Daemons

slurmctld # cluster daemon, one per cluster; optional backup daemon for HA

# manage resource and job queue

slurmd # node daemon, one per compute node

# launch and manage task

# optionally, the node daemon can use hierarchical tree (multi-levels), where

# leaf nodes do not connect directly to slurmctld, for better scalability.

slurmd -C # print out RealMemory, cpu/core config, etc needed for slurm.conf

slurm*d -c # clear previous state, purge all job, step, partition state

-D # NOT daemon mode, logs written to console

-v # verbose

-vvv # very very verbose

??

-p debug # partition debug

slurmd -C # print node config (number of cpu, mem, disk space) and exit

# output can be fed into slurm config file

slurmstepd # slurm daemon to shepherd a job step

# when job step finishes, this process terminates

slurmdbd # slurm db daemon, interface between slurm admin tools and MySQL.

mysqld # db hosting accounting and limit info, jobs, etc.

Add slurm/install_linux/bin to PATH.

For testing, open several windows and run the daemons with -Dcvv arguments. Each additional -v would about double the log messages.

SLURM DB

DB powering slurm settings is quite complex, but very powerful. Also keep user accounting info.

sacctmgr # add/delete clusters, account, user

# get/set resource limit, fair-share, max job count, max job size, QoS, etc

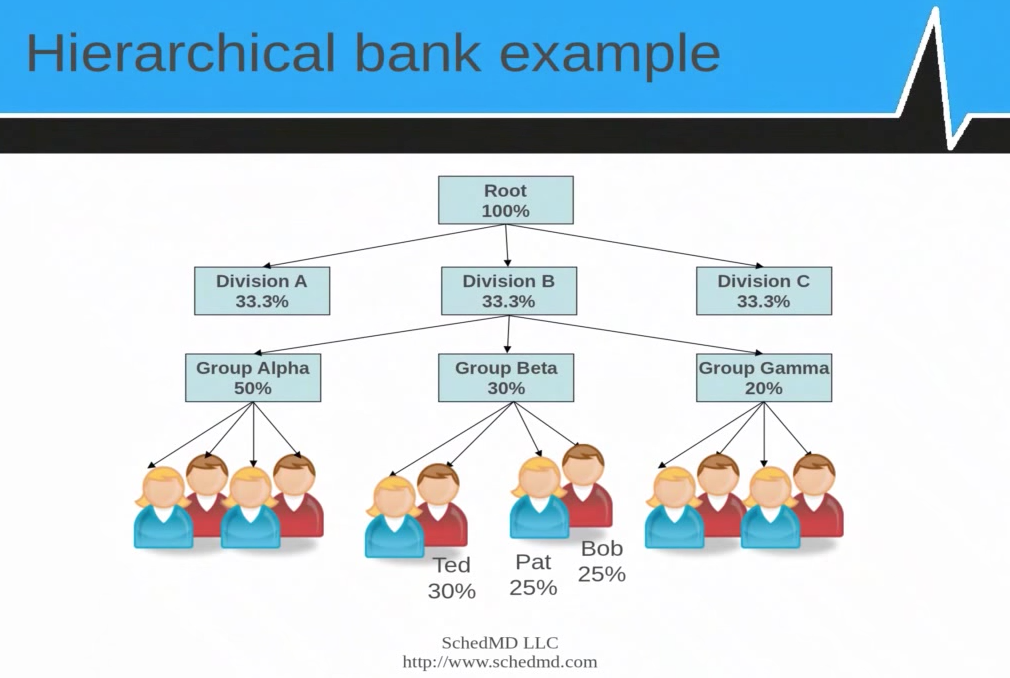

# based on hierarchical banks

# Many slurm db update done via this cmd

# updates pushed out live to slurmd (or slurmctld?)

# hierarchical banks: Divisions, each with different groups, which in turn have their own set of users.

Root = ? whole site, supercluster spanning all partitions.

Division = Partitions? (eg lr5, dirac1, cf1, etc) QOS??

Group = association level. eg Group Beta gets GrpCPUs, GrpJobs, GrpMemory.

User = specific user account.

They mention Hierarchical, does this mean associations can be nested? Never seen an example of that, maybe not.

Slurm Hierarchical bank example from SUG 2012 (page 7, 13)

The order in which limits are enforced is:

- Partition QOS limit

- Job QOS limit

- User association

- Account association(s), ascending the hierarchy

- Root/Cluster association

- Partition limit

ref:

ceci-hpc

Admin Config

- Install RPM to all nodes

- Must configure node grouping in partition

- Most other config param has usable defaults

- Web GUI tool in doc/html/configurator.html

- Use MUNGE for authentication. Each node needs to have the MUNGE auth key configured, and a munge daemon running.

- testsuite/expect has ~300 test programs to validate installation. edit globals.local to select/config tests.

slurm/install_linux/etc/slurm.conf has default template that is almost usable.

DB and accounting not configured in this default template

Need to change 2 user name and HOSTNAME. User is typically root, but in test, could use personal account.

see slurm.conf man page for details

ControlMachine # use output from hostname -s

NodeName # in node spec section

Nodes # in partition spec section

SlurmUser # slurmctld user. use output from id -un

SlurmdUser # slurmd user.

...

- run scontrol reconfig for slurmctld to read new config (eg adding new partitions). But if adding nodes, need to restart slurmctld, reconfig will just kill the daemon.

- There is NO need to run scontrol create partition ... as a command. All partition info is generated from definition in the slurm.conf file. Just remember to run reconfig cmd as above.

- gres.conf needs to be configured for GPU. use nvidia-smi topo -m to find out GPU:CPU binding

Can try this for rolling reboot:

scontrol reboot asap nextstate=resume reason="reboot to upgrade node" n0052.hpc0,n0064.hpc0,n0065.hpc0,n0066.hpc0,n0067.hpc0,n0070.hpc0,n0071.hpc0,n0074.hpc0,n0076.hpc0,n0081.hpc0,n0083.hpc0,n0084.hpc0,n0085.hpc0,n0087.hpc0

Ref:

Large cluster admin guide

cgroup & slurm

grep -r [0-9] /cgroup/cpuset/slurm

/cgroup/cpuset/slurm/uid_43143/job_13272521/step_batch/cpuset.mem_hardwall:0

/cgroup/cpuset/slurm/uid_43143/job_13272521/cpuset.mem_hardwall:0

/cgroup/cpuset/slurm/uid_43143/cpuset.mem_hardwall:0

/cgroup/cpuset/slurm/cpuset.mem_hardwall:0

grep [0-9] /cgroup/memory/slurm/*

/cgroup/memory/slurm/memory.limit_in_bytes:9223372036854771712

/cgroup/memory/slurm/memory.max_usage_in_bytes:2097704960 # 2G

/cgroup/memory/slurm/uid_43143/memory.max_usage_in_bytes:1216872448 # 1.1G

/cgroup/memory/slurm/uid_43143/job_13272521/memory.max_usage_in_bytes:1216872448

# very often

# mount -t /cgroup ... /sys/fs/cgroup

Slurm processes are echoed into the cgroup tasks file. eg:

tin 15537 1 0 15:05 ? 00:00:07 /global/software/sl-7.x86_64/modules/langs/java/1.8.0_121/bin/java -cp /global/scratch/tin/spark/spark-test.13272521/conf/:/global/software/sl-7.x86_64/modules/java/1.8.0_121/spark/2.1.0/jars/* -Xmx1g org.apache.spark.deploy.master.Master --host n0093.lr3 --port 7077 --webui-port 8080

grep -r 15537 *

cpuset/slurm/uid_43143/job_13272521/step_batch/cgroup.procs:15537

cpuset/slurm/uid_43143/job_13272521/step_batch/tasks:15537

memory/slurm/uid_43143/job_13272521/step_batch/cgroup.procs:15537

memory/slurm/uid_43143/job_13272521/step_batch/tasks:15537

freezer/slurm/uid_43143/job_13272521/step_batch/cgroup.procs:15537

freezer/slurm/uid_43143/job_13272521/step_batch/tasks:15537
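Going the other way, from a PID to the job that owns it, is a matter of pulling the job_NNN component out of the matching cgroup paths. A small sketch, assuming the same cgroup v1 layout as shown above; the helper name is made up.

```shell
#!/bin/bash
# job_of_cgroup_line: read grep output lines such as
#   cpuset/slurm/uid_43143/job_13272521/step_batch/tasks:15537
# on stdin and print the unique Slurm job IDs found in the paths.
job_of_cgroup_line() {
    sed -n 's|.*/job_\([0-9][0-9]*\)/.*|\1|p' | sort -u
}

# Sample lines copied from the grep output above.
printf '%s\n' \
    'cpuset/slurm/uid_43143/job_13272521/step_batch/tasks:15537' \
    'memory/slurm/uid_43143/job_13272521/step_batch/cgroup.procs:15537' |
    job_of_cgroup_line

# On a node with the same layout, something like this should work (untested):
#   (cd /cgroup && grep -r 15537 .) | job_of_cgroup_line
```

A plain grep for the PID can also match other numbers that merely contain it, so the result is worth a sanity check against squeue.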

slurm Assistant

.bashrc functions and alias

alias sevents="sacctmgr show events start=2018-01-01T00:00" # node=n0270.mako0 # history of sinfo events (added by scontrol)

alias assoc="sacctmgr show associations -p"

SLURM Ref

SLURM TRES Ref

SGE/UGE

SGE = Sun Grid Engine. Son of a Grid Engine

OGE = Open Grid Engine. And NOT Oracle GE !! They eventually sold all GE stuff to Univa anyway.

UGE = Univa Grid Engine.

GE = Grid Engine.

User Howto

Find out what queue is avail:

qconf -sql

Submit a job to a specific queue:

qsub -q 10GBe.q sge-script.sh # eg run only in a queue named for nodes with 10 gigE NIC (options go before the script name, anything after it is passed to the script)

qlogin -q interactive.q # often an interactive queue may have more relaxed load avg req than same host on default.q or all.q

# but limited slots to avoid heavy processing in them

# yet granting a prompt for lightweight testing env use even when cluster is fully busy

qsub -R y -pe smp 8 ./sge-script.sh # -R y : Reservation yes... avoid starvation in busy cluster

# -pe smp 8 : req run with a Parallel Environment (PE) of 8 cores (smp)

qrsh

### examples of qsub with request for extra resources ###

# Find out pe env, eg smp, mpi, orte (openmpi, most widely used mpi)

qconf -spl

# Describe detail of a pe env:

qconf -sp mpi

qconf -sp smp

# Submit a job to a queue with certain cores numbers

qsub -pe smp 8 sge-script.sh # this req 8 core from machine providing smp parallel env.

qsub -q default.q@compute-4-1 sge-script.sh # submit to a specific, named node/host. also see -l h=...

# an example that takes lot of parameters

# -l h_rt specifies hard limit stop for the job (for scheduler to know what's ceiling time of run job)

# -l m_mem_free specifies free memory on node where job can be run.

# seems to be mem per core, so if specify 8GB and -pe smp 8, then it would need a system with 64GB free RAM

# -pe smp 8 specify to use the symmetric multiprocessing Parallel Environment, 8 cores

# -binding linear:8 is to allow multiple threads of the process to use multiple cores (some uge or cgroup config seems to default bind to single core even when pe smp 8 is specified).

# incidentally, load average counts runnable jobs. so even if 6 threads are bound to a single core,

# load avg maybe at 6 but most other core sits idle as visible by htop.

qsub -M me@example.com -m abe -cwd -V -r y -b y -l h_rt=86400,m_mem_free=6G -pe smp 8 -binding linear:8 -q default.q -N jobTitle binaryCmd -a -b -c input.file

# Find out what host group are avail, eg host maybe grouped by cpu cores , memory amount.

qconf -shgrpl

# Find out which specific nodes are in a given host group:

qconf -shgrp @hex

qsub -q default.q@@hex sge-script.sh # @@groupname submits to a group, and the group can be defined to be number of cores. not recommended.

# Change the queue a job is in, thereby altering where subsequent job is run (eg in array job)

qalter jobid -q default.q@@hex

Basic User Commands

qsub sge-script.sh # submit job (must be a script)

# std out and err will be captured to files named after JOBid.

# default dir is $HOME, unless -cwd is used.

qsub -cwd -o ./sge.out -e ./sge.err myscript.sh

# -cwd = use current working dir (eg when $HOME not usable on some node)

# -o and -e will append to files if they already exist.

qsub -S /bin/bash # tell SGE/qsub to interpret the script file using the specified shell

-q queue-name # submit the job to the named queue.

# eg default@@10gb would select the default queue, with 10gb (complex) resource available

-r y # rerunnable job (yes/no). With y, a job that aborts without a clean exit state (typically when its node crashes) is run again.

-R y # use Reservation. useful on a busy cluster when requesting > 1 core.

# holds freed cores for the job until all requested cores become avail.

# the job is less likely to starve, but the cluster is used less efficiently.

-N job-name # specify a name for the job, visible in qstat, output filename, etc. not too important.

-pe smp 4 # request 4 cpu cores for the job

-binding linear:4 # when core binding defaults to on, this allows an smp process to use the desired number of cores

-l ... # specify resources shown in qconf -sc

-l h_rt=3600 # hard time limit of 3600 seconds (after that the process gets a kill -9)

-l m_mem_free=4G # ask for node with 4GB of free memory.

# if also req smp, memory req is per cpu core

qsub -t 1-5 # create array job with task id ranging from 1 to 5.

# note: all parameters of qsub can be placed in script

# eg, place #$ -t 1-5 in script rather than specify it in qsub command line

qstat

-j # show overall scheduler info

-j JOBID # status of a job. There is a brief entry about possible errors.

-u USERNAME # list all jobs (running and pending) of the specified user

-u \* # list all jobs from all users

-ext # extended overview list, show cpu/mem usage

-g c # queues and their load summary

-pri # priority details of jobs

-urg # urgency details

-explain A # show reason for Alarm (also E(rror), c(onfig), a(larm) state)

-s r # list running jobs

-s p # list pending jobs

Reduce the priority of a job:

qalter -p -500 JOBID

# negative values DEcrease priority, lowest is -1023

# positive values INcrease priority, only admin can do this. max is 1024

# It really just affects pending jobs (eg state qw). It won't preempt running jobs.

qalter -p -100 -u USERNAME

# DEcrease job priority of all jobs for the specified user

# (those that have already been submitted with qsub, not future jobs)

qdel JOBID # remove the specified job

qdel -f JOBID # forcefully delete a job, for those that sometimes get stuck in "dr" state where qdel returns "job already in deletion"

# would likely need to go to the node hosting the job and remove $execd_spool_dir/.../active_jobs/JOBID

# else may see lots of complaints about zombie jobs in the qmaster messages file

Job STATE abbreviations

They are in output of qstat

E = Error

qw = queued waiting

r = running

dr = running, but marked for deletion (can get stuck here, eg when the host is no longer responding)

s = suspended

t = transferring (job is being handed over to sge_execd). Jobs that exceeded the h_rt threshold while sge_execd had problems contacting the scheduler have also ended up in this state.

ts = ??

h = hold (eg qhold, or held by a job dependency tree)

qstat -f shows host info. last column (6) is state, and when in problem, has things like au or E on it.

au state means alarm, unreachable. typically the host is transiently down or sge_execd is not running.

clearable automatically when sge can talk to the node again.

E is hard Error state, and even with reboot, will not go away.

qmod -cq default.q@HOSTNAME # clear Error state, do this after sys admin knows problem has been addressed.

qmod -e default.q@HOSTNAME # enable node, ie, remove the disabled state. should go away automatically if reboot is clean.

Here is a quick way to list hosts in au and/or E state:

qstat -f | grep au$ # will get most of the nodes with alarm state, but misses adu, auE...

qstat -f | awk '$6 ~ /[a-zA-Z]/ {print $0}' # some host have error state in column 6, display only such host

qstat -f | awk '$6 ~ /[a-zA-Z]/ && $1 ~ /default.q@compute/ {print $0}' # add an additional test for nodes in a specific queue

alias qchk="qstat -f | awk '\$6 ~ /[a-zA-Z]/ {print \$0}'" # this escape seq works for alias

alias qchk="qstat -f | awk '\$6 ~ /[a-zA-Z]/ && \$1 ~ /default.q@/ {print \$0}'" # this works too

(o state for host: when a host is removed from @allhosts and is no longer allocatable in any queue)
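The awk one-liners above can be wrapped in a small function and tried without a cluster by feeding it saved output. A minimal sketch: problem_queues is a made-up name, the sample text is invented to look like qstat -f output, and the extra test on column 1 (must contain @) is an addition here so the header line, whose sixth word is "states", is not printed.

```shell
# Print queue instances whose state column (6) is non-empty.
# Reads `qstat -f` style text on stdin.
problem_queues() {
    awk '$1 ~ /@/ && $6 ~ /[a-zA-Z]/ {print $1, $6}'
}

# Illustrative sample only (made-up hosts), shaped like `qstat -f` output.
sample='queuename                      qtype resv/used/tot. load_avg arch          states
---------------------------------------------------------------------------------
default.q@compute-4-1.local    BIP   0/2/8          1.98     lx-amd64
---------------------------------------------------------------------------------
default.q@compute-4-2.local    BIP   0/0/8          -NA-     lx-amd64      au
---------------------------------------------------------------------------------
default.q@compute-4-3.local    BIP   0/0/8          0.01     lx-amd64      E'

printf '%s\n' "$sample" | problem_queues
# on a real cluster: qstat -f | problem_queues
```

Printing only the queue instance and its state keeps the output short enough to paste into a ticket or a cron mail.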

Basic+ User Commands

qmon # GUI mon

qsub -q default.q@compute-4-1 $SGE_ROOT/examples/jobs/sleeper.sh 120

# run job on a specific host/node, using SGE example script that sleeps for 120 sec.

qsub -l h=compute-5-16 $SGE_ROOT/examples/jobs/sleeper.sh 120

# run job on a specific host/node, alt format

qsub -l h='compute-[567]-*' $SGE_ROOT/examples/jobs/sleeper.sh 120

# run on a set of nodes (pattern quoted so the shell does not expand it). Not really recommended; using some resource or hostgroup would be better.

qconf

-sc # show complex eg license, load_avg

-ss # see which machine is listed as submit host

-sel # show execution hosts

-sql # see list of queues (but more useful to do qstat -g c)

qhost # see list of hosts (those that can run jobs)

# their arch, state, etc info also

-F # Full details

qhost -F -h compute-7-1 # Full detail of a specific node, include topology, slot limit, etc.

qstat -f

qstat -f # show host state (contrast with qhost)

# column 6 contain state info. Abbreviation used (not readily in man page):

# upper case = permanent error state

a Load threshold alarm

o Orphaned

A Suspend threshold alarm

C Suspended by calendar

D Disabled by calendar

c Configuration ambiguous

d Disabled

s Suspended

u Unknown

E Error

qstat -f | grep d$ # disabled node, though some time appear as adu

qstat -f | awk '$6~/[cdsuE]/ && $3!~/^[0]/'

# node with problematic state and job running (indicated in column 3)
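Since combined states such as adu or auE are easy to misread, the abbreviation table above can be turned into a small lookup. A sketch only: decode_state is a hypothetical helper, and the wording of each state is copied from the table in these notes, not from the man page.

```shell
# Expand a qstat -f queue state string (eg "adu") into words,
# using the abbreviation table from the notes above.
decode_state() {
    rest=$1
    out=""
    while [ -n "$rest" ]; do
        ch=${rest%"${rest#?}"}   # first character of what is left
        rest=${rest#?}           # drop that character
        case $ch in
            a) word="load threshold alarm" ;;
            o) word="orphaned" ;;
            A) word="suspend threshold alarm" ;;
            C) word="suspended by calendar" ;;
            D) word="disabled by calendar" ;;
            c) word="configuration ambiguous" ;;
            d) word="disabled" ;;
            s) word="suspended" ;;
            u) word="unknown" ;;
            E) word="error" ;;
            *) word="?($ch)" ;;  # letter not in the table
        esac
        out="${out:+$out, }$word"
    done
    echo "$out"
}

decode_state adu
```

Unknown letters are echoed back as ?(x) rather than dropped, so a state that is missing from the table still shows up.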

Sample submit script

#!/bin/bash

#$ -S /bin/bash

#$ -cwd

#$ -N sge-job-name

#$ -e ./testdir/sge.err.txt

#$ -m beas

#$ -M tin@grumpyxmas.com

### #$ -q short_jobs.q

### -m defines when email is sent: (b)egin, (e)nd, (a)bort, (s)uspend

### -M tin@grumpyxmas.com works only after specifying -m (w/o -m no mail is sent at all)

### if -M is not specified, email will be sent to username@mycluster.local

### such an address will work if the mailer supports it

### (eg postfix's recipient_canonical has a mapping for

### @mycluster.local @mycompany.com)

## bash commands for SGE script:

echo "hostname is:"

hostname

This is a good ref for sge task array job:

http://talby.rcs.manchester.ac.uk/~ri/_linux_and_hpc_lib/sge_array.html

#!/bin/bash

#$ -t 1-100

# -t will create a task array, in this eg, elements 1 to 100, inclusive

# The following example extracts one line out of a text file, the line number matching the SGE task array element number,

# and feeds the file named on that line to a program as its input:

INFILE=$(awk "NR==$SGE_TASK_ID" my_file_list.text)

# instead of awk, can use sed: sed -n "${SGE_TASK_ID}p" my_file_list.text

./myprog < "$INFILE"

#

# these variables are usable inside the task array job:

# $SGE_TASK_ID

# $SGE_TASK_FIRST

# $SGE_TASK_LAST

# $SGE_TASK_STEPSIZE, eg 20 in -t 1-100:20, which produces 5 tasks (IDs 1, 21, 41, 61, 81), each expected to handle 20 elements

#

# JOB_ID

# JOB_NAME

# SGE_STDOUT_PATH

#

For other variables available inside an SGE script, see the

qsub man page
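When a step size is used, as in the -t 1-100:20 example above, each task has to work out which elements belong to it. The following is a minimal sketch of that arithmetic; chunk_range is a hypothetical helper, and the fallback values only exist so the snippet can be tried outside SGE. It assumes the script runs as an array task (so the SGE_TASK_* variables hold numbers).

```shell
# Hypothetical helper: print "first last" for the elements this task owns.
# Outside SGE the variables are unset, so sample values stand in for
# task 41 of a job submitted with `-t 1-100:20`.
chunk_range() {
    first=${SGE_TASK_ID:-41}
    step=${SGE_TASK_STEPSIZE:-20}
    last_all=${SGE_TASK_LAST:-100}
    last=$(( first + step - 1 ))
    # the final chunk may be shorter than the step size
    if [ "$last" -gt "$last_all" ]; then
        last=$last_all
    fi
    echo "$first $last"
}

range=$(chunk_range)
start=${range% *}
end=${range#* }
echo "this task handles lines $start to $end of my_file_list.text"
# eg: sed -n "${start},${end}p" my_file_list.text | while read -r f; do ./myprog < "$f"; done
```

Clamping to SGE_TASK_LAST matters when the range is not a multiple of the step size, eg -t 1-100:30, where the last task starts at 91.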

#!/bin/bash

#$ -V # inherit user's shell env variables (eg PATH, LD_LIBRARY_PATH, etc)

#$ -j y # yes to combine std out and std err

Admin Commands

qalter ... # modify a job

qalter -clear ... # reset all elements of job to initial default status...

qmod -cj JOBID # clear an error that is holding a job from progressing

qhold JOBID # place a job on hold (aka qalter -h u JOBID)

# for qw job, hold prevents it from moving to r state

# for running job, probably equiv of kill -suspend to the process. frees up cpu, but not memory or licenses.

qrls -hn JOBID # release a job that is on hold (ie resume)

qacct -j JOBID # accounting info for jobs

qacct -j # see all jobs that SGE processed, all historical data

qacct -o USERNAME # see summary of CPU time user's job has taken

qrsh -l mem_total=32G -l arch=x86 -q interactive # obtain an interactive shell on a node with the specified characteristics

qconf # SGE config

-s... # show subcommand set

-a... # add subcommand set

-d... # delete

-m... # modify

qconf -sq [QueueName] # show how the queue is defined (s_rt, h_rt are soft/hard time limit on how long jobs can run).

qconf -sc # show complexes

qconf -spl # show pe

qconf -sel # list nodes in the grid

qconf -se HOSTNAME # see node detail

qconf -sconf # SGE conf

qconf -ssconf # scheduler conf

qconf -shgrpl # list all groups

qconf -shgrp @allhosts # list nodes in the named group "@allhosts"

-shgrp_tree grp # list nodes one per line

qconf -su site-local # see who is in the group "site-local"

qconf -au site-local sn # add user sn to the group called "site-local"

qconf -sq highpri.q # see characteristics of the high priority queue, eg which groups are allowed to submit jobs to it.

# hipri adds urgency of 1000

# express pri adds urgency of 2000

# each urgency of 1000 adds a 0.1 to the priority value shown in qstat.

# default job is 0.5, hipri job would be 0.6
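The urgency rule of thumb above is simple enough to check numerically. A sketch, assuming only what the notes state (0.5 for a default job, plus 0.1 per 1000 of urgency); this is not the scheduler's full priority formula, and prio_from_urgency is a made-up helper name.

```shell
# Hypothetical helper: priority value as it would appear in qstat for a
# given urgency, per the rule of thumb in the notes above.
prio_from_urgency() {
    awk -v u="$1" 'BEGIN { printf "%.1f\n", 0.5 + (u / 1000) * 0.1 }'
}

prio_from_urgency 0      # default job
prio_from_urgency 1000   # hipri
prio_from_urgency 2000   # express
```

awk is used because plain shell arithmetic is integer only.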

qconf -spl # show PE env

qconf -sconf # show global config

qconf -ssconf # show scheduler config (eg load sharing weight, etc)

Enable fair sharing (default in GE 6.0 Enterprise):

qconf -mconf # global GE config

enforce_user auto

auto_user_fshare 100

qconf -msconf # scheduler config

weight_tickets_functional 10000

# fair share only schedules jobs within their 0.x level

# each urgency of 1000 adds 0.1 to the priority value,

# thus a high priority job will not be affected by the fair share of default jobs.

# any 0.6xx level job will run to completion before any 0.5xx job will be scheduled to run.

# eg use: can declare a project priority so any job submitted with the project code will get higher priority and not be penalized by previous runs and fair share...

qconf -tsm # trigger scheduler monitoring: dump scheduler info with explanation of historical usage weight

# very useful in share-tree scheduling

# (which reduces priority based on historical half-life usage of the cluster by a given user)

# straightforward functional share ignores history (less explaining to user)

# It is a one-time dump, produced at the scheduler's next run

# log should be stored in $SGE_ROOT/$SGE_CELL/common/schedd_runlog

qping ...

tentakel "ping -c 1 192.168.1.1"

# run ping against head node IP on all nodes (setup by ROCKS)

Disabling an Execution Host

Method 1

If you're running 6.1 or better, here's the best way.

- Create a new hostgroup called @disabled (qconf -ahgrp @disabled).

- Create a new resource quota set via "qconf -arqs limit hosts @disabled to slots=0" (check with qconf -srqs).

- Now, to disable a host, just add it to the host group (qconf -aattr hostgroup hostlist compute-1-16 @disabled).

- To reenable the host, remove it from the host group (qconf -dattr hostgroup hostlist compute-1-16 @disabled).

- Alternatively, can update disabled hosts by doing qconf -mhgrp @disabled, placing NONE in the hostlist when there are no more nodes in this disabled hostgroup.

This process will stop new jobs from being scheduled to the machine and allow the currently running jobs to complete.

- To see which nodes are in this disabled host group:

qconf -shgrp @disabled

- The disabled hosts rqs can be checked with qconf -srqs and should look like

{

name disabled_hosts

description "Disabled Hosts via slots=0"

enabled TRUE

limit hosts {@disabled} to slots=0

}

Ref:

http://superuser.com/questions/218072/how-do-i-temporarily-take-a-node-out-from-sge-sun-grid-engine
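The add/remove steps of Method 1 lend themselves to a pair of tiny wrappers. The sketch below uses only the qconf -aattr and -dattr calls shown above; the function names, the DRY_RUN switch and the HGRP variable are made up for illustration. With DRY_RUN=1 (the default here) the command is only printed, which makes it safe to try on a machine without SGE.

```shell
# Hypothetical wrappers around the Method 1 commands above.
# With DRY_RUN=1 the qconf command is only printed, not executed.
DRY_RUN=${DRY_RUN:-1}
HGRP=${HGRP:-@disabled}   # host group name used in the steps above

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

disable_host() { run qconf -aattr hostgroup hostlist "$1" "$HGRP"; }
enable_host()  { run qconf -dattr hostgroup hostlist "$1" "$HGRP"; }

disable_host compute-1-16
enable_host compute-1-16
```

Run with DRY_RUN=0 on an admin host to actually change the host group; qconf -shgrp @disabled then shows the result.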

Method 2

qmod -d \*@worker-1-16 # disable node worker-1-16 from accepting new jobs in any/all queues.

qmod -d all.q@worker-1-16 # disable node worker-1-16 from accepting new jobs in the all.q queue.

qmod -d worker-1-16 # disable node worker-1-16, current jobs finish, no new job can be assigned.

qstat -j # can check whether nodes are disabled or full as far as the scheduler is concerned

qstat -f # show host status.

qstat -f | grep d$ # look for disabled node (after reboot, even if crashed by memory, sge/uge disable node)

qmod -e all.q@worker-1-16 # re-enable node

qmod -cq default.q@worker-5-4 # remove the "E" state of the node.

Method 3

This will remove the host right away, probably killing jobs running on it.

Thus, use only when host is idle.

But it should be the surest way to remove the node.

qconf -de compute-1-16 # -de = delete execution host (kills running jobs!)

qconf -ae compute-1-16 # re-add it when maintenance is done.

Pausing queue

Pausing the queue means all existing jobs will continue to run, but jobs submitted with qsub after the queue is disabled will just get queued up until it is re-enabled. ref: http://www.rocksclusters.org/roll-documentation/sge/4.2.1/managing-queues.html

qmod -d default.q # this actually places every node serving the queue in "d" state. check with qstat -f

qmod -e default.q

Admin howto

Add Submit Host

See all exec node in cluster:

qconf -sel

Add submit host:

qconf -as hostname

# note: forward and reverse DNS lookups for execution and submit hosts must match

# workstations can be submit hosts; they will not be running sgeexecd.

# submit host don't need special software, but would need qsub to submit.

# though often, sge client package is installed

# /etc/profile.d/settings.*sh will be added for configurations. minimally:

# SGE_CLUSTER_NAME=sge1

# SGE_QMASTER_PORT=6444

# optional?:

# SGE_CELL=default

# SGE_EXECD_PORT=6445

# SGE_ROOT=/cm/shared/apps/sge/current

# submit host need to know who the master is.

# ie /etc/hosts need entry for the cluster head node external IP

Add Execution Host (ie compute node)

Add an execution host to the system, modeled after an existing node:

qconf -ae TemplateHostName

# the TemplateHostName is an existing node which the new addition will be modeled after (gets its complex config, etc)

# command will open in vi window. change name to new hostname, edit whatever complex etc. save and exit.

qconf -Ae node-config.txt

# -A means add node according to the spec from a file

# the format of the file can be a cut-n-paste of what the vi window shows when doing qconf -ae

example of node-config.txt ::

hostname node-9-16.cm.cluster

load_scaling NONE

complex_values m_mem_free=98291.000000M,m_mem_free_n0=49152.000000M, \

m_mem_free_n1=49139.093750M,ngpus=0,slots=40

user_lists NONE

xuser_lists NONE

projects NONE

xprojects NONE

usage_scaling NONE

report_variables NONE

## above is cut-n-paste of qconf -ae node-1-1 + edit

## add as qconf -Ae node-config.txt

A way to script adding a bunch of nodes at the same time. create a shell script with the following, and run it:

(fix slots to match the number of cpu cores you have. Memory should be fixed by UGE.)

for X in $(seq 1 16 ) ; do

echo "

hostname compute-2-$X

load_scaling NONE

complex_values m_mem_free=98291.000000M,m_mem_free_n0=49152.000000M, \

m_mem_free_n1=49139.093750M,ngpus=0,slots=28

user_lists NONE

xuser_lists NONE

projects NONE

xprojects NONE

usage_scaling NONE

report_variables NONE " > compute-2-$X.qc

qconf -Ae compute-2-$X.qc

rm compute-2-$X.qc

done

qhost -F gpu | grep -B1 'ngpus=[1-9]' # list hosts with at least 1 GPU installed

qconf -as hostname.fqdn

# -as = add submit host

# note: forward and reverse DNS lookups for execution and submit hosts must match

# remove a workstation from a workstation.q

# (needed before the execution host can be removed)

qconf -mhgrp @wkstn

# (opens in vi env, edit as desired, removing whatever host should not be in the grp anymore)

remove execution host (think this will delete/kill any running job)

qconf -de hostname

# groups are member of queue

# host are member of group? or also to queue?

# node doesn't want to run any job. Check ExecD:

# login to the node that isn't running.

# don't do on head node!!

/etc/init.d/sgeexecd.clustername stop

# if it doesn't stop, kill the process

/etc/init.d/sgeexecd.clustername start

# slots are defined in the queue, and also for each exec host.

#

# typically define slot based on cpu-cores, which are added to group named after them, eg quad, hex

qconf -mq default.q

# vi, edit desired default slots, or slots avail in a given machine explicitly.

# eg

slots 8,[node-4-9.cm.cluster=24], \

[node-4-10.cm.cluster=20],[node-4-11.cm.cluster=20], \

[node-1-3.cm.cluster=12]

qconf -se node-4-10 # see slots and other complex that the named execution host has
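To see which slot count a queue's slots line gives a particular node, the value can be parsed with awk. This is a sketch under assumptions: slots_for_host is a hypothetical helper, the continuation lines are assumed to be already joined into one string, and host names are matched exactly as written (host group entries such as @hex are not expanded).

```shell
# Hypothetical helper: given a queue "slots" value and a host name,
# print the slot count that applies to that host.
slots_for_host() {
    printf '%s\n' "$1" | awk -v host="$2" '
    {
        gsub(/[ \t]/, "")                 # drop stray whitespace
        n = split($0, part, ",")
        result = part[1]                  # first entry is the default
        for (i = 2; i <= n; i++) {
            sub(/^\[/, "", part[i])       # strip the brackets around overrides
            sub(/\]$/, "", part[i])
            split(part[i], kv, "=")
            if (kv[1] == host) result = kv[2]
        }
        print result
    }'
}

slots='8,[node-4-9.cm.cluster=24],[node-4-10.cm.cluster=20],[node-1-3.cm.cluster=12]'
slots_for_host "$slots" node-4-10.cm.cluster   # per-host override
slots_for_host "$slots" node-2-2.cm.cluster    # falls back to the default
```

On a live system the input string would come from the slots entry of qconf -sq default.q, after joining its backslash-continued lines.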

Cgroups

cgroups_params controls the configuration of the cgroups feature. It needs to be set via qconf -mconf. Available in UGE 8.1.7 and later; the setting may need to be added by hand if the system was hot-upgraded.

cgroups_params cgroup_path=/cgroup cpuset=false m_mem_free_hard=true

Places where (CPU) slots are defined

(Not sure of order of precedence yet)

- Queue config, ie qconf -mq. slots clause NEED(?) to specify which compute nodes has how many slots.

- qconf -se NodeName

- processors clause

- slots=n definable in complex values clause.

- num_proc=n in load_values clause

- m_core=n in load_values clause

- m_thread=n in load_values clause

- m_socket=n in load_values clause